Daniel Shiffman

People occasionally ask me how to get started with procedural generation.

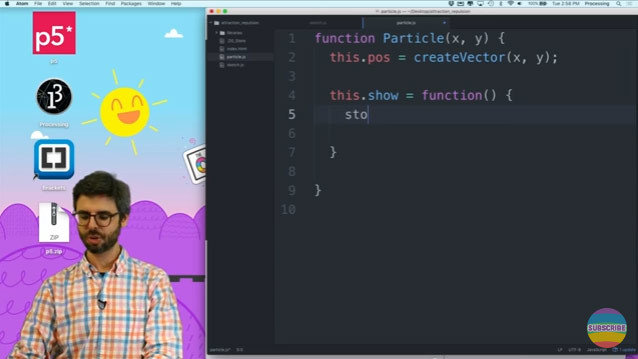

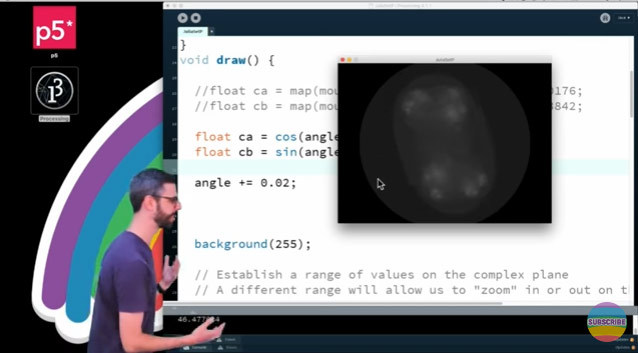

When someone asks me how to learn to program, all else being equal, I generally suggest that they start with Processing, because it’s an accessible platform with instant visual feedback and tons of resources.

For learning procedural generation specifically, I’ve pointed people to various resources in the past. But I think what I should be recommending to most people is Daniel Shiffman’s creative computing resources.

Daniel is the author of The Nature of Code, a book about using natural principles to create emergent software, and has created hundreds of creative coding videos with Processing. You can learn Poisson-disc sampling, A* pathfinding, genetic algorithms, strange attractors, and dozens of other useful things.

The topics he covers are great building blocks for your own generative projects, and even for experts there’s a lot here to learn from.