Universal Adversarial Perturbations

Now for the recent developments in adversarial image mis-classifications: fixed patterns that can cause most images to be mis-categorized.

This research by Seyed-Mohsen Moosavi-Dezfooli, Alhussein Fawzi, Omar Fawzi, and Pascal Frossard has lead to the discovery that there are patterns that can perturb nearly every image enough to cause an image recognition neural net to fail to identify it most of the time.

Earlier research into adversarial patterns required that you solve an optimization problem for each image. The universal patterns don’t need to do this: they can just add the pattern to every image, which saves a lot of time and effort.

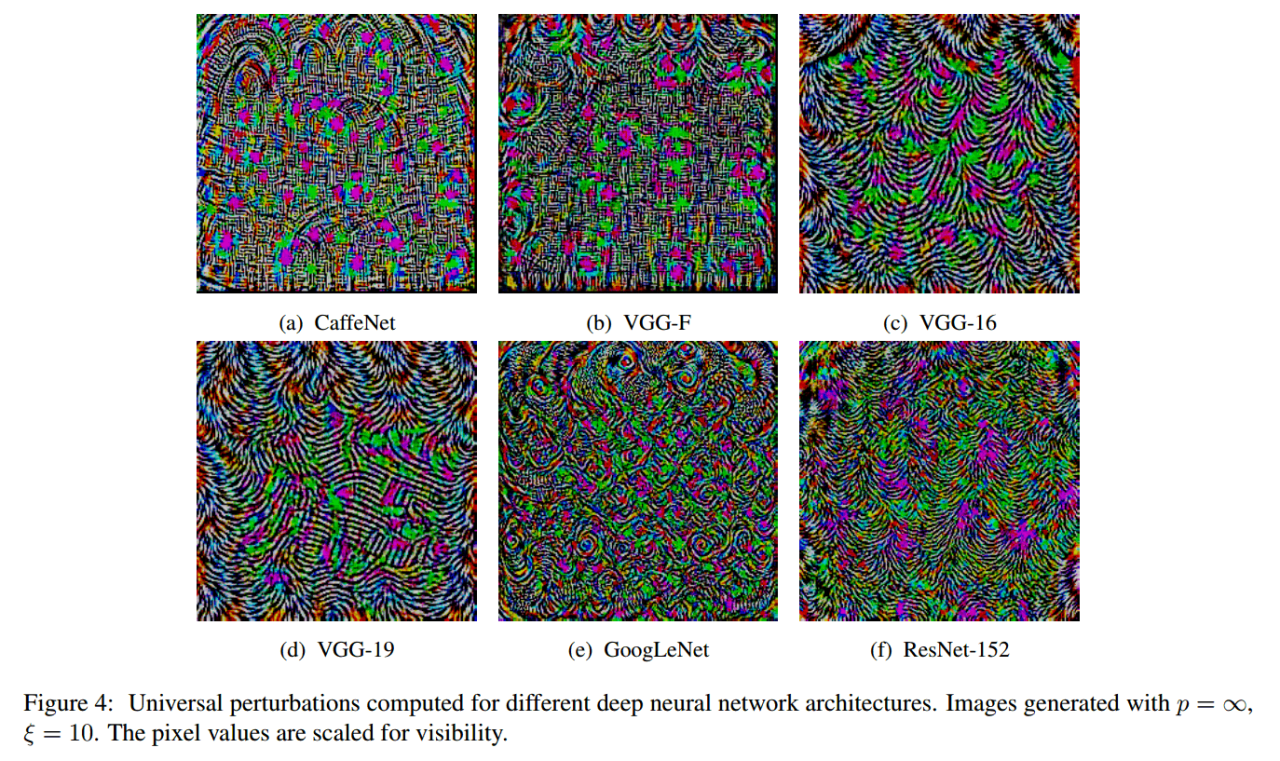

Interestingly, despite the universal patterns being slightly different between different neural net architectures, they are still universal enough that one pattern works pretty well on all of the neural nets.

So if you want to fool, say, a facial recognition system, but you don’t know what neural net it uses, you can just deploy one of these patterns and expect that you’ll likely trick it.

It also tells us more about what is going on under the hood of these neural networks. Understanding why these universal patterns work can help us build better neural networks. The researchers think that the explanation might be because there’s a correlation between different regions of the decision boundary, so the geometry of the decision boundary is what they plan to research in the future.