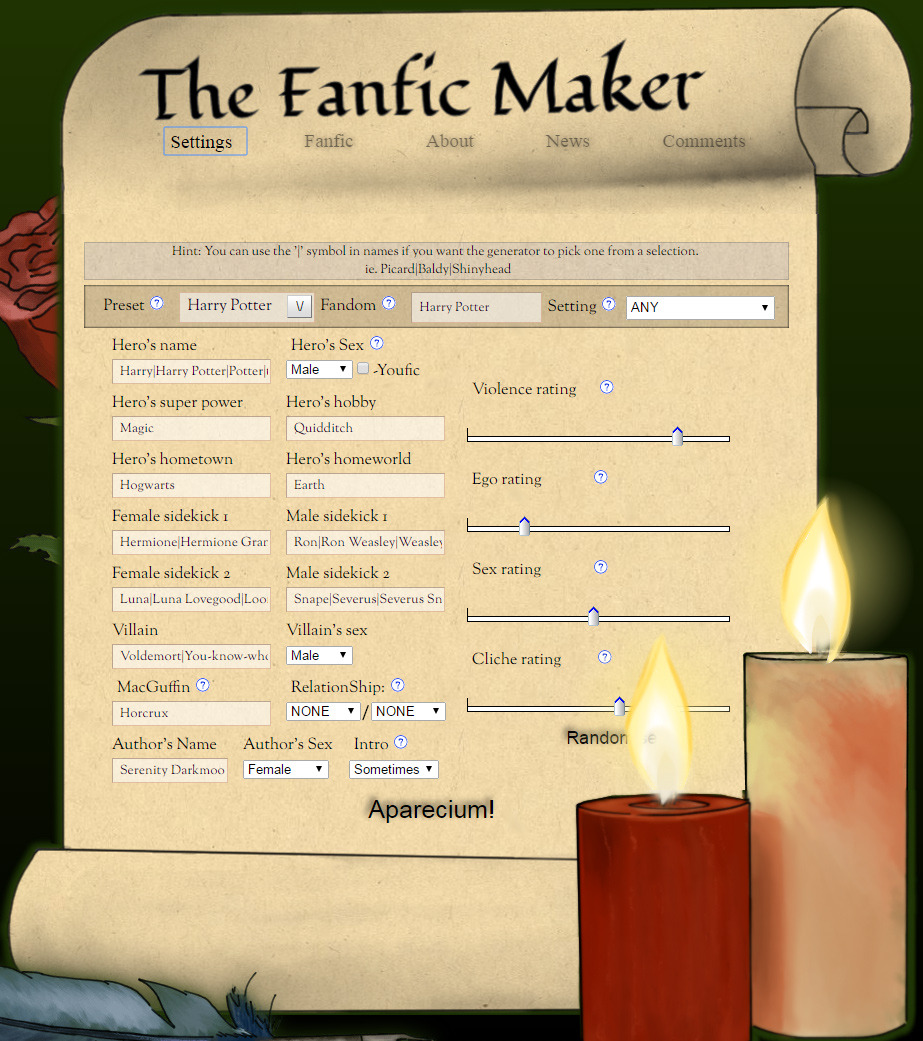

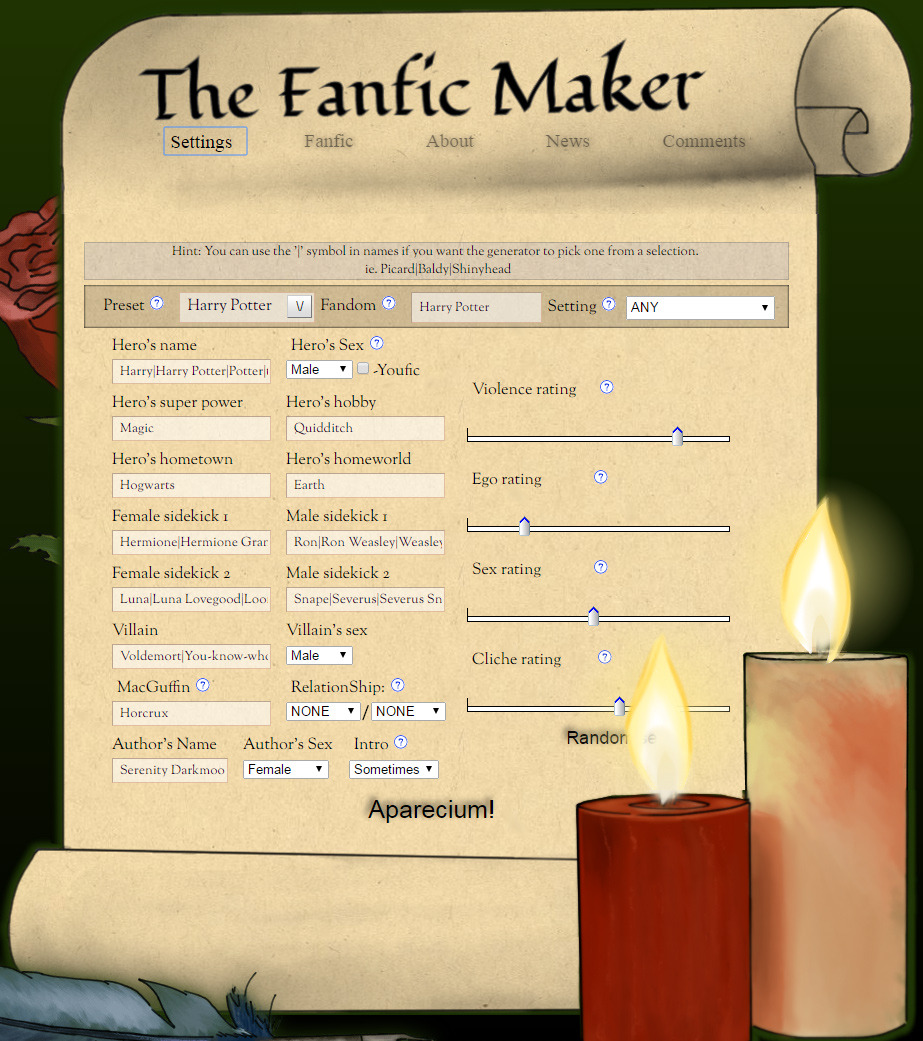

The Fanfic Maker

Choosing a framing for a story-generator can have a lot of impact on how it is received. In the case of The Fanfic Maker, the frame is that it makes terrible fan fiction.

The stories it generates are not anywhere near as well-written or sophisticated as A Time For Destiny. Instead of aiming for an overindulgent but cohesive result, it is a generator of intentionally bad writing. Or, in their words, “A site to generate awefull stories automatically.”

The parties responsible go by the name of Lost Again, a small group of art-game and educational-game makers.

The advantage of aiming low is that it’s much easier to hit your target. This should not be underestimated: giving an AI an excuse helps set expectations and avoid the Eliza effect that happens when we expect too much. That’s why the “Eugene Goostman” bot pretended to be a 13-year-old Ukrainian boy, and why the 140-character limitation on Twitter bots is the kind of creative constraint that led to an explosion of bots being created.

Working within the limitations of a medium can create a much stronger result.

As Matthew Weise has pointed out, many of the approaches that have been developed for delivering narrative in videogames stem from the developers at Looking Glass Studios being dissatisfied with the way conversations weren’t very immersive in Ultima Underworld. Thus, for System Shock they invented the audio log. For Thief, you’re hiding and eavesdropping: you have very good reasons not to participate in the conversations. Influenced by these and other innovations (particularly Half-Life) the present-day approach to telling a story with a game gradually developed by embracing the limitations of the interface.

While nowadays I believe that NaNoGenMo demonstrates that we can aim higher in our procedurally generated stories, it’s still a rhetorically powerful move to embrace a constraint. Constraints create structure.

Thus, the meta-joke that The Fanfic Maker runs on. It certainly doesn’t rise to the heights of the best fan fiction. Mostly because it deliberately turned and ran in the other direction, screaming loudly.

http://fanficmaker.com/