On Endings, Pacing, and Length (and No Man’s Sky)

Games don’t end when the game says “The End”. Games end when the player stops.

Granted, the two often happen at the same point in time, but I’d venture to say that for the majority of games that isn’t the case.

Sometimes players get bored or distracted and stop before the formal end. That doesn’t mean that it’s a bad game: I’ve never actually finished Skyrim’s main quest, but I’ve spent a lot of time in it.

On the flip side, some games you finish playing and then immediately jump back in and play again. Competitive games, like chess or Team Fortress 2, are obviously structured this way, but it can apply to any game you enjoy enough to play again. The existence of things like New Game Plus is merely a formal, mechanical recognition that we often play games we like multiple times.

In procedurally generated games, “the end” is often even less meaningful than usual. (Minecraft literally has a place called “The End” which doesn’t actually end very much.) But that doesn’t mean they don’t have endings; it just means that the player decides what the ending is.

If this sounds like a harder thing to design, that’s because it is.

Alexis Kennedy’s discussion of endings is relevant here. How Fallen London handles endings is instructive, particularly the problem of giving closure in a story game that has, so far, continued indefinitely.

Fixed dramatic forms have had the advantage of a few thousand years spent learning how to generate catharsis and closure. There’s a lot we can borrow from them, particularly from the less-fixed forms such as theater, as Kentucky Route Zero does so well. But there are still a lot of possibilities left to uncover.

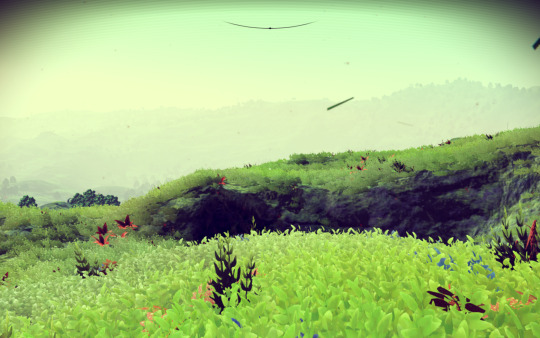

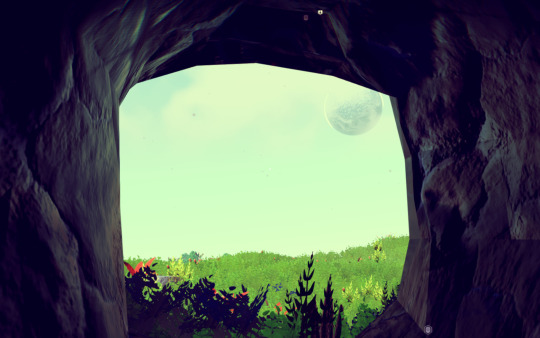

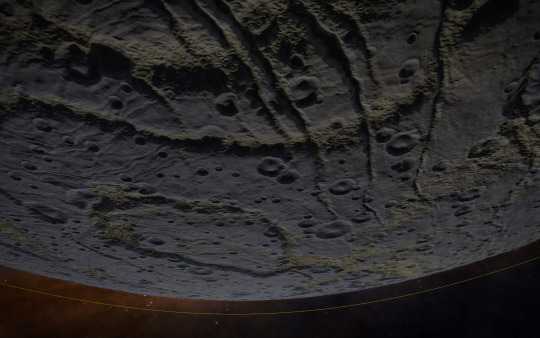

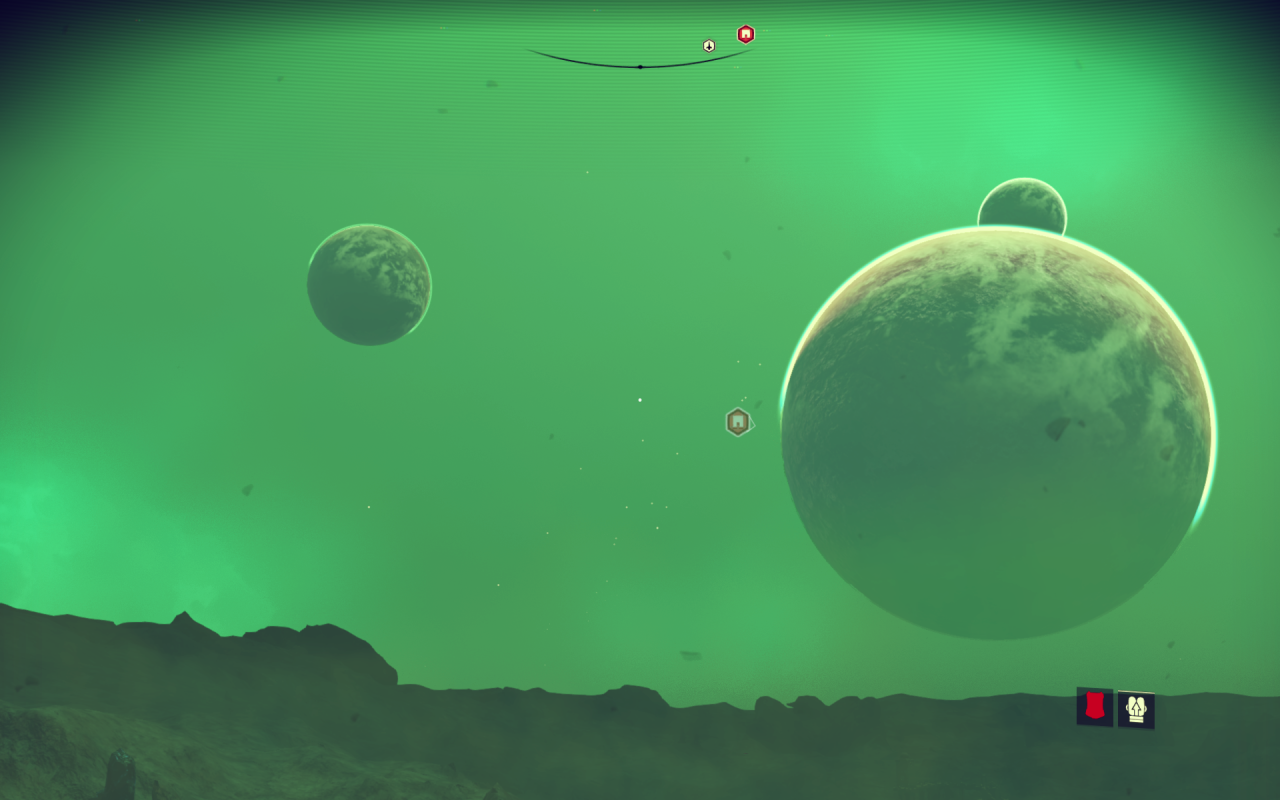

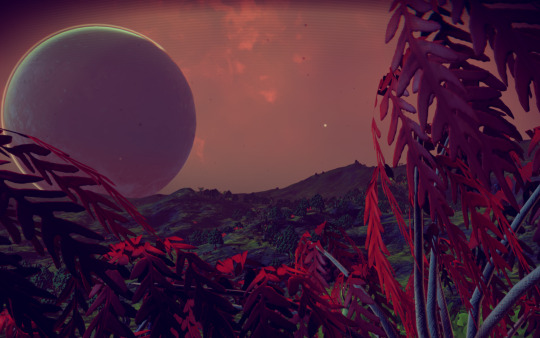

No Man’s Sky, of course, has the center of the galaxy as its ostensible goal. But, like most procedurally generated gameworlds of indefinite size, the real constraints come from the player. No Man’s Sky is big, but it will only last as long as you keep wanting to play it.

No Man’s Sky doesn’t have 18 quintillion planets. It has however many planets you end up visiting before you decide to stop.

The other planets still matter, since the game itself will never tell you to stop. You can keep on going for the rest of your life, if you like. But, as Borges described, contemplating the Library of Babel is akin to meditating on infinity. Read the procedurally generated Library of Babel long enough, and you start to experience some of the emotions described in the short story.

Pacing

Pacing in film is dictated by the tempo of the shots and edits. In a game, pacing concerns the timing of interactions and the discovery of new content, which together set the heartbeat of the player’s engagement.

But how do you design the pacing for a game that’s longer than the player’s lifespan?

Most current videogames consist of an emergent system with a progression on top of it, though other nestings of the two aspects are possible. SimCity is a good example of a more layered nesting: there’s the emergent simulation of the city, the progression of new things to build (gated by money or population), and the emergent problems that occur as your city expands and your original traffic plans no longer work in a larger metropolis.

No Man’s Sky has the progression of unlocking new blueprints, following the Atlas, getting to the center, getting a bigger ship, and so on, but that runs in parallel to the emergent discovery of the next surprising planet. This makes designing the pacing a weird, dynamic problem, more so than in most other kinds of games.

Pacing both halves of No Man’s Sky is tricky. I suspect that most players are going to stop when they feel like they’ve seen enough, rather than when they reach the end of the progression paths. That’s not much of a leap, though: for any given game, only a minority of players will ever complete it. The idea of beating a game may be embedded in gamer culture, but I believe it was always a myth, in both senses. Beating a game is a story we tell ourselves about the kind of gamer we think we’re supposed to be.

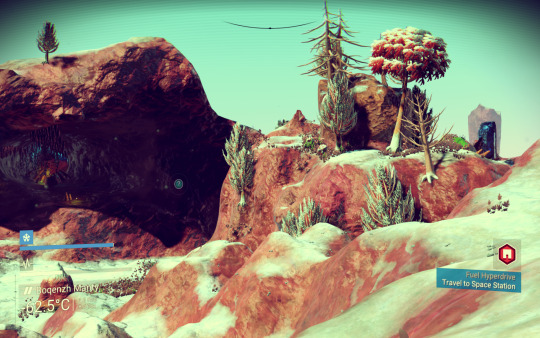

But it’s also because the two halves of No Man’s Sky are so disconnected. You won’t run out of planets, but you’ll start seeing already-known blueprints pretty quickly.

If there is more variation in the buildings or items deeper into the game than I’ve gone so far, I suspect most people will stop long before they discover it. Pacing the static progression content is hard, because it will never keep up with the procedural generation. Either you make it fast to keep a reasonable rate of reward (and walking across a planet is already a contemplative pace) or you make it much slower to stretch it out.

I wish they had included some parallel procedurally generated content systems, like the weapon generation in Borderlands or Galactic Arms Race. That would have given another dimension to the exploration: repetitious actions are more forgivable in the context of random rewards.

But of course that would make the overall balance even trickier than it is now, with some players having amazing games, others frustrating ones, and still others boring ones, in unpredictable ways. Not to mention the extra development time, or the risk of procedurally generated loot spitting out dominant choices that eliminate whole swaths of gameplay. It’s not something you can just drop in over a weekend.

If nothing else, No Man’s Sky has given me a lot to contemplate. As Alexis Kennedy said, players want closure and continuity. How do you do that in a game that has no defined end?

As for the pacing, try it as a design exercise: how would you deal with pacing the content? And do it with the same or fewer development resources: no magic content fairy for you.

Even for linear, narrative-progression-driven games, this complexity is worth keeping in mind. Every game is larger than its progression system.