Reflectance Modeling by Neural Texture Synthesis

It’s that time of year again: SIGGRAPH was last week, giving us a ton of new computer graphics research to be astounded by.

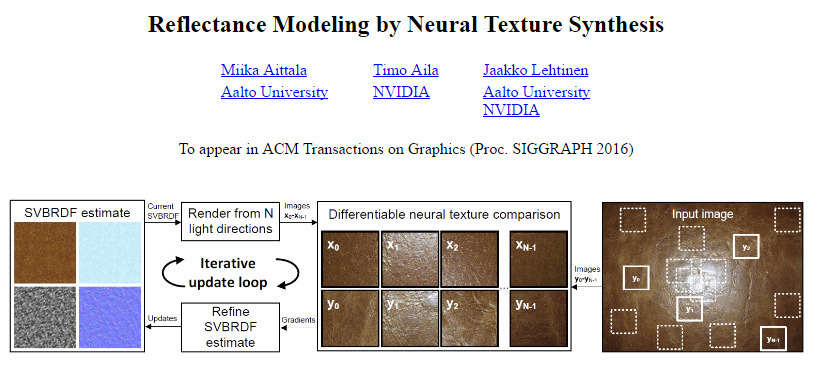

Like this research by Miika Aittala, Timo Aila, and Jaakko Lehtinen: from a single photograph, a neural network uses parametric texture synthesis to model how a textured material reflects light.
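For readers curious what "parametric texture synthesis" means in the neural setting: a common approach (introduced by Gatys et al.) summarizes a texture by the Gram matrices of a pretrained convolutional network's feature maps. Each Gram matrix records how strongly pairs of feature channels co-occur, with position averaged away. The sketch below is a minimal NumPy illustration under that assumption. Random arrays stand in for real network activations, and `gram_matrix` and `texture_loss` are hypothetical names, not code from the paper.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel correlations of a (channels, height, width) feature map.

    Spatial positions are summed out, so the result says which features
    co-occur, not where they occur. That is what makes it a texture statistic.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)

def texture_loss(features_a, features_b):
    """Sum of squared differences between the Gram matrices of two feature maps."""
    diff = gram_matrix(features_a) - gram_matrix(features_b)
    return float(np.sum(diff ** 2))

rng = np.random.default_rng(0)
# Hypothetical stand-in for CNN activations of an image: 8 channels, 16x16 positions.
a = rng.standard_normal((8, 16, 16))
# Same feature vectors at shuffled positions: a different "image" with identical statistics.
perm = rng.permutation(16 * 16)
b = a.reshape(8, -1)[:, perm].reshape(8, 16, 16)
print(texture_loss(a, b))  # essentially zero: shuffling positions leaves the Gram matrix unchanged
```

Because two images with matching Gram matrices count as the "same texture" even when their pixels are arranged differently, synthesis methods of this kind can optimize an output until its statistics match the input's, rather than copying pixels.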

If you’re not a computer graphics artist, you might be wondering what the big deal is, so here’s what’s going on:

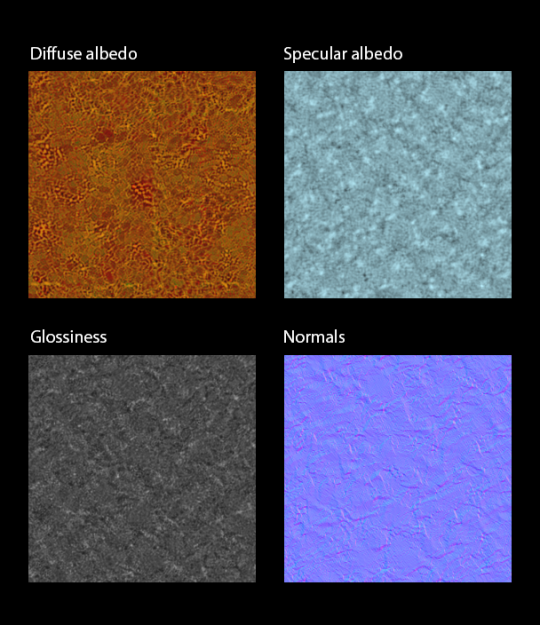

Describing a material to be rendered involves both its color and the way it reflects light. There are a bunch of ways to approach this, but one common way is to break the texture into its albedo, the basic diffuse color; specularity, the degree to which it reflects its surrounding environment; and glossiness, how mirror-like or scattered that reflection is.
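To make those three parameters concrete, here is a minimal sketch of a generic Blinn-Phong-style shading function. This is an assumption for illustration, not the reflectance model used in the paper: `albedo` scales a Lambertian diffuse term, `specular` scales the highlight, and `glossiness` is an exponent that controls how tight that highlight is. All names are hypothetical.

```python
import numpy as np

def normalize(v):
    return v / np.linalg.norm(v)

def shade(albedo, specular, glossiness, normal, light_dir, view_dir):
    """Reflected RGB radiance for unit-intensity light, using a Lambertian
    diffuse term plus a Blinn-Phong highlight (illustrative model only)."""
    n, l, v = normalize(normal), normalize(light_dir), normalize(view_dir)
    h = normalize(l + v)                          # half-vector between light and view
    n_dot_l = max(float(np.dot(n, l)), 0.0)
    n_dot_h = max(float(np.dot(n, h)), 0.0)
    diffuse = albedo / np.pi                      # basic color, same from every view direction
    highlight = specular * n_dot_h ** glossiness  # higher exponent = tighter, more mirror-like
    return (diffuse + highlight) * n_dot_l

albedo = np.array([0.6, 0.4, 0.2])
specular = np.array([0.5, 0.5, 0.5])
normal = np.array([0.0, 0.0, 1.0])
light = np.array([0.0, 0.0, 1.0])
off_mirror_view = np.array([0.3, 0.0, 1.0])  # slightly away from the mirror direction

for glossiness in (10, 1000):
    print(glossiness, shade(albedo, specular, glossiness, normal, light, off_mirror_view))
```

Viewed from exactly the mirror direction, both materials reflect the same amount. A little off that direction, the high-glossiness highlight has already faded while the low-glossiness one is still bright; that fall-off is the "mirror-like or scattered" distinction.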

Typically, when you photograph something to use as a texture, you want the lighting to be as flat as possible. Any reflections or shadows in the photo won't match the lighting you add later, so the photo needs to contain as little of them as possible. This is difficult, and the work it takes to get a clean diffuse map and the matching reflection data means that it's often faster to paint the textures from scratch or procedurally generate them.


An image like this one would be terrible for the usual approach because of the giant reflection hotspot in the middle:

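But with this research, that actually helps. Feed this single image into a texture synthesis neural network, and it uses the way the reflection changes across the image to infer the optical properties of the material: because the texture repeats, the same kind of surface detail is seen at many different angles to the light. So you can turn that into this: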

Which I think is both a clever idea and a pretty convincing result. I hope this is the kind of thing that will feature prominently in future artistic tools, doing the heavy lifting so we can focus on image creation.
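Why would a hotspot help? A rough way to see it, under idealized assumptions (a flat surface, a camera looking straight down, and a flash at the lens, all hypothetical here): because the light and camera sit at the same point, each spot on the surface is seen at a different angle, and a highlight's brightness falls off with that angle at a rate set by the glossiness. The Python sketch below computes that fall-off along one line across the photo, reusing a Blinn-Phong-style highlight as an assumed model. It illustrates the intuition only and is not the paper's method; the function names are hypothetical.

```python
import numpy as np

def flash_half_angle_cosines(width, height, samples):
    """Cosine between the surface normal and the half-vector at evenly spaced
    points along a line across a flat surface, photographed straight down
    from `height` by a camera whose flash sits at the lens."""
    x = np.linspace(-width / 2, width / 2, samples)  # offsets from the point under the camera
    # Light and camera coincide, so the half-vector points straight back at the camera.
    return height / np.sqrt(height ** 2 + x ** 2)

def specular_profile(cosines, glossiness):
    """Blinn-Phong-style highlight strength at each point (illustrative model)."""
    return cosines ** glossiness

cosines = flash_half_angle_cosines(width=1.0, height=0.5, samples=5)
for g in (10, 200):
    print(g, np.round(specular_profile(cosines, g), 4))
```

Both profiles peak at 1.0 in the middle, the hotspot, but the glossier material's highlight collapses much faster toward the edges. A single flash photo therefore records how brightness changes with angle, information that a flat-lit photo lacks.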

https://mediatech.aalto.fi/publications/graphics/NeuralSVBRDF/