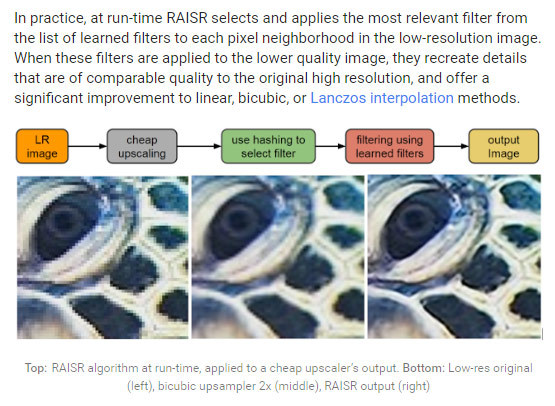

RAISR - Image Upsampling via Machine Learning

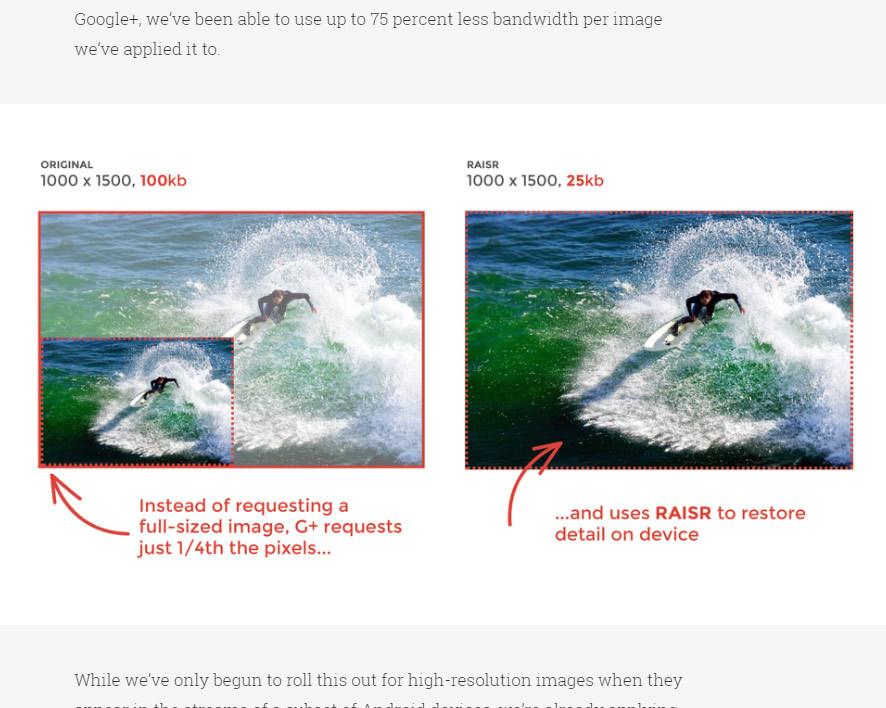

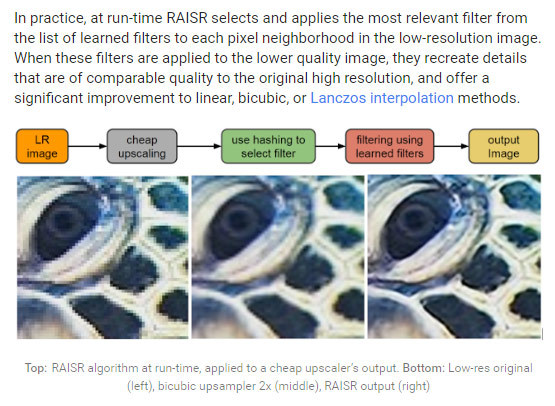

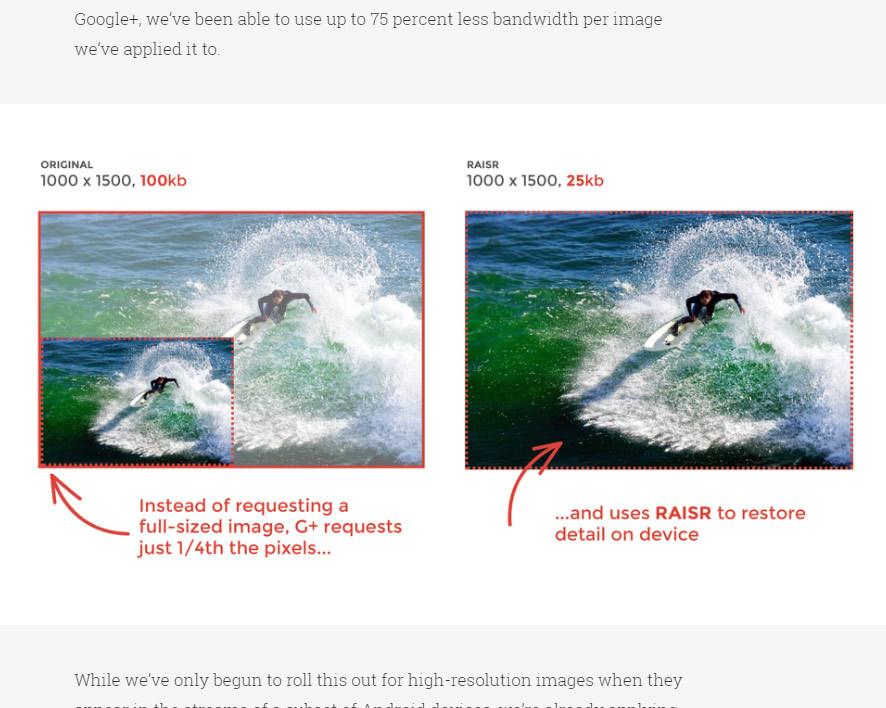

Google has started rolling out RAISR, a machine-learning-based upsampling technique. Instead of downloading a full-sized image, Google+ on Android devices will be able to download a small image and then scale it up on the device.

(I think Alex Champandard predicted this would be coming soon, but this is really soon.)

This obviously saves a lot of bandwidth: a 2x upsample (twice the width and twice the height) means sending only a quarter of the pixels, so you get nearly the same image for roughly a quarter of the bandwidth.
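To make the pixel arithmetic concrete, here is a toy round trip. It assumes a 2x2 averaging downsample on the server and a plain nearest-neighbour upsample on the phone. RAISR's client-side step uses learned filters instead, which this sketch does not attempt; it only shows the data flow and the 4:1 pixel ratio. Images are plain lists of grayscale values.

```python
# Toy "send small, upsample on device" round trip.
# Assumptions: 2x2 box-filter downsample on the server, nearest-neighbour
# upsample on the client (a stand-in for RAISR's learned filters).

def downsample_2x(img):
    """Average each 2x2 block into one pixel (height and width must be even)."""
    h, w = len(img), len(img[0])
    return [
        [
            (img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]

def upsample_2x_nearest(img):
    """Repeat every pixel into a 2x2 block."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def pixel_count(img):
    return len(img) * len(img[0])

original = [[(x * y) % 256 for x in range(8)] for y in range(8)]
sent = downsample_2x(original)      # what travels over the network
shown = upsample_2x_nearest(sent)   # what the phone reconstructs

print(pixel_count(original), pixel_count(sent), pixel_count(shown))  # 64 16 64
print(pixel_count(sent) / pixel_count(original))                     # 0.25
```

The ratio is in pixels; a compressed file will not necessarily shrink by exactly the same factor, which is why the saving is "roughly" a quarter.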

This is pretty amazing, for a lot of reasons, and I expect that tech like this will be incorporated into a lot of future production techniques. Why download the entire thing when the upsampled version looks nearly identical? Rendering large images will mean rendering at half resolution and scaling up. Once you have a real-time solution, expect 4K videogames to get a big boost in rendering speed.
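A back-of-the-envelope sketch of why that matters for 4K games. It assumes, purely for illustration, that rendering cost scales linearly with the number of pixels shaded, and it treats the cost of the upscaling pass as a hypothetical parameter `upscale_cost` (a fraction of a native-resolution frame). Real renderers have per-frame costs that don't scale this way.

```python
# Pixels shaded per frame: native 4K versus half width and half height.
# Assumption: cost is proportional to pixel count (illustrative only).

def pixels(width, height):
    return width * height

native_4k = pixels(3840, 2160)   # UHD "4K" output
half_res = pixels(1920, 1080)    # internal render resolution

print(native_4k, half_res, native_4k / half_res)  # 8294400 2073600 4.0

def speedup(upscale_cost):
    """Speedup of half-res rendering plus an upscale pass over native 4K.

    `upscale_cost` is a hypothetical cost of the upscaler, expressed as a
    fraction of one native-resolution frame.
    """
    return 1.0 / (half_res / native_4k + upscale_cost)

print(speedup(0.0))   # 4.0: a free upscaler gives the full 4x
print(speedup(0.25))  # 2.0: an expensive upscaler eats half the gain
```

The second function is the "real-time solution" point in miniature: the win only materializes if the upscaler itself is cheap relative to rendering.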

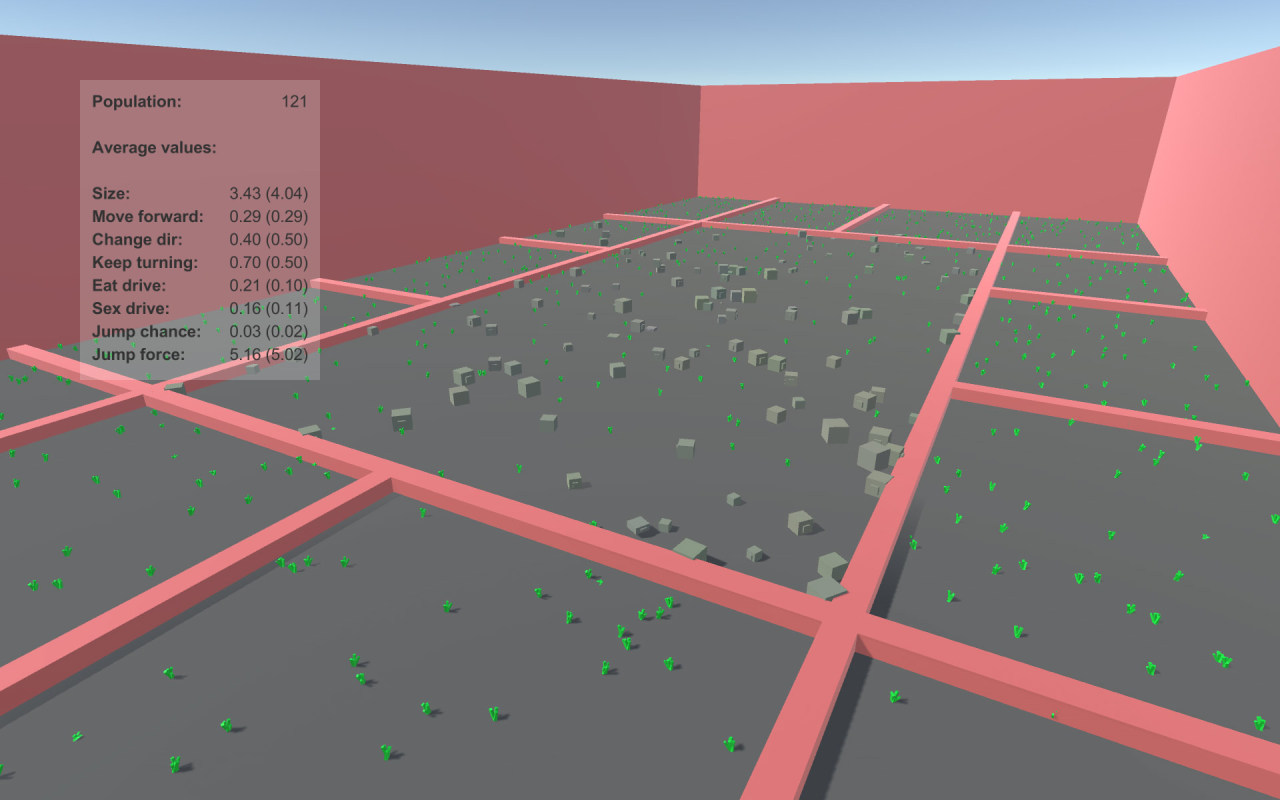

Of course, I’ve been immersed in this stuff for a while now. (In the other window I have open, I have a bunch of images I’m using to train Neural Enhance on paintings.) So I feel obligated to point out that there will also be downsides: an upsampled image can never contain more information than the small image it was generated from, so by definition it has less information than the full-resolution original.

Since the whole idea is to throw away information that doesn’t matter to human perception, this won’t be an issue for the average photo. But if you are, say, looking for a source image for visual effects or scientific research, it will be a big problem. You probably shouldn’t store your original photos in this downsampled form, though you may not have a choice if your camera makes the decision for you.
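A small demonstration of why the lost information can't simply be learned back. It assumes the same kind of 2x2 averaging downsample as a stand-in for whatever the server does: three visibly different 2x2 patches all shrink to the same single pixel, so any upsampler, learned or not, receives identical input for all three and can reproduce at most one of them exactly.

```python
# Different originals, identical downsampled result.
# Assumption: a 2x2 averaging downsample (illustrative stand-in).

def downsample_2x(img):
    """Average each 2x2 block into one pixel (height and width must be even)."""
    h, w = len(img), len(img[0])
    return [
        [
            (img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]

checker_a = [[0, 255], [255, 0]]          # checkerboard
checker_b = [[255, 0], [0, 255]]          # the opposite checkerboard
flat_gray = [[127.5, 127.5], [127.5, 127.5]]

for patch in (checker_a, checker_b, flat_gray):
    print(downsample_2x(patch))           # [[127.5]] every time

# An upsampler only ever sees [[127.5]], so whatever it outputs
# can match at most one of the three originals.
```

A learned upsampler picks whichever answer looks most plausible given its training data; for an ordinary photo that guess is usually fine, but it is still a guess.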

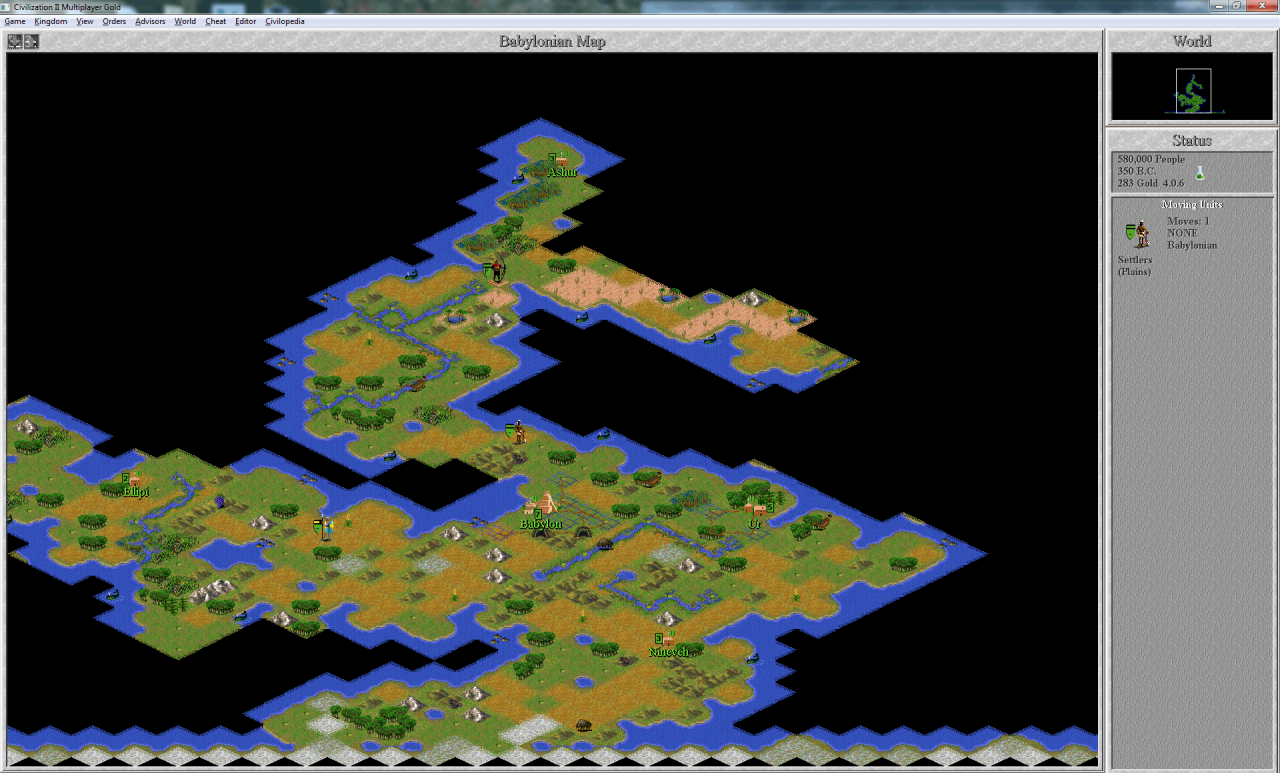

And, of course, what counts as irrelevant data depends on the purpose of the photo: recall that some Xerox scanners had a JBIG2-based compression mode that altered numbers in scanned documents. The documents looked almost the same, since a 1 and a 2 don’t look all that different from a distance, but the meaning was vastly different. Image upsampling has similar pitfalls: since it’s based on estimating what data looks right, the result might erase differences between details that look similar but mean something different.
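A toy sketch of how "close enough" matching can silently change meaning. This is loosely in the spirit of the symbol matching behind the Xerox problem, not JBIG2 itself: the tiny 3x5 font, the pixel-difference matcher, and the `threshold` parameter are all illustrative assumptions. The digits 6 and 8 are used because in this font they differ by a single pixel.

```python
# Toy symbol substitution: reuse a stored glyph if it is "close enough".
# Assumptions: hypothetical 3x5 font, Hamming-distance matching.

GLYPHS = {
    "6": ("###",
          "#..",
          "###",
          "#.#",
          "###"),
    "8": ("###",
          "#.#",
          "###",
          "#.#",
          "###"),
}

def distance(a, b):
    """Number of pixels that differ between two equal-sized bitmaps."""
    return sum(pa != pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))

def compress(digits, threshold):
    """Replace each glyph with the first stored glyph within `threshold`
    differing pixels; otherwise store it as a new symbol."""
    dictionary = []   # glyph names stored so far
    output = []
    for name in digits:
        match = next(
            (d for d in dictionary if distance(GLYPHS[d], GLYPHS[name]) <= threshold),
            None,
        )
        if match is None:
            dictionary.append(name)
            match = name
        output.append(match)
    return "".join(output)

print(compress("686", threshold=0))  # 686: exact matching keeps the 8
print(compress("686", threshold=1))  # 666: the 8 is swapped for a 6
```

Every glyph in the second output is a perfectly clean digit, which is exactly the problem: nothing in the result signals that a substitution happened.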

Of course, this is just one more way for photographs to mislead us, along with framing, staging, and the whole panoply of ways that photographs fail to be objective. But this is a manipulation that is invisible to us, unthinking alterations tirelessly enacted by our machine servants. What you see may not be what was there.

Still, it’s an impressive achievement, one that just a few years ago would have seemed impossible.