Gearhead: Arena & Gearhead 2

It’s been a while since I talked about the Gearhead mecha roguelike series.

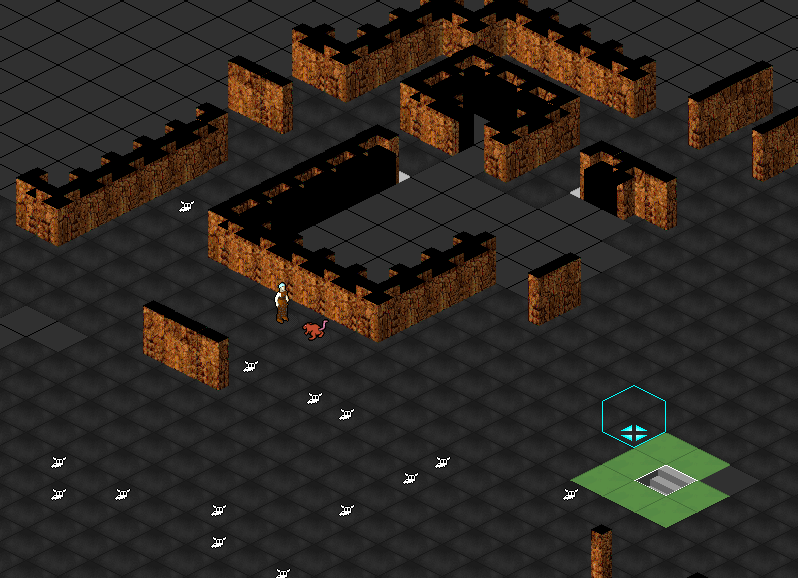

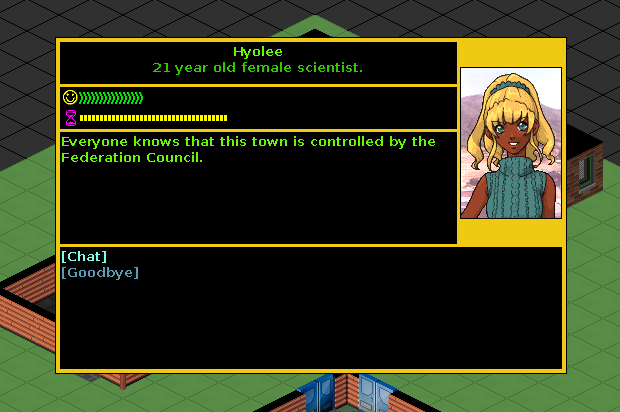

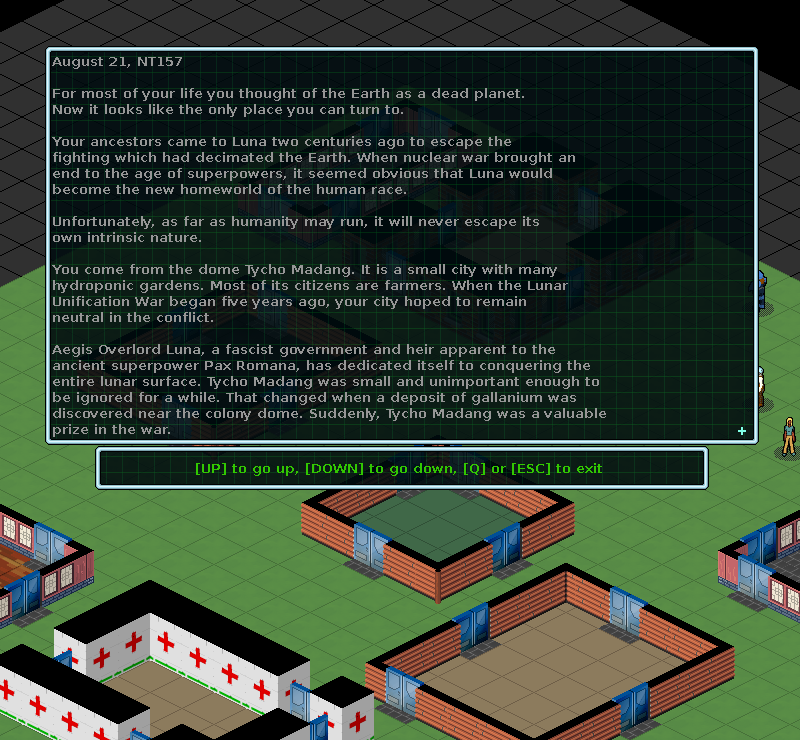

In the meantime, the site has been revised, the source code has been migrated to GitHub, and you can find the games on itch.io. Gearhead has some interesting innovations in the roguelike space: you can pilot giant mecha; there’s an ongoing, generated plot that’s different each game; and it uses randomly generated conversation responses to fill out its dialogue system.

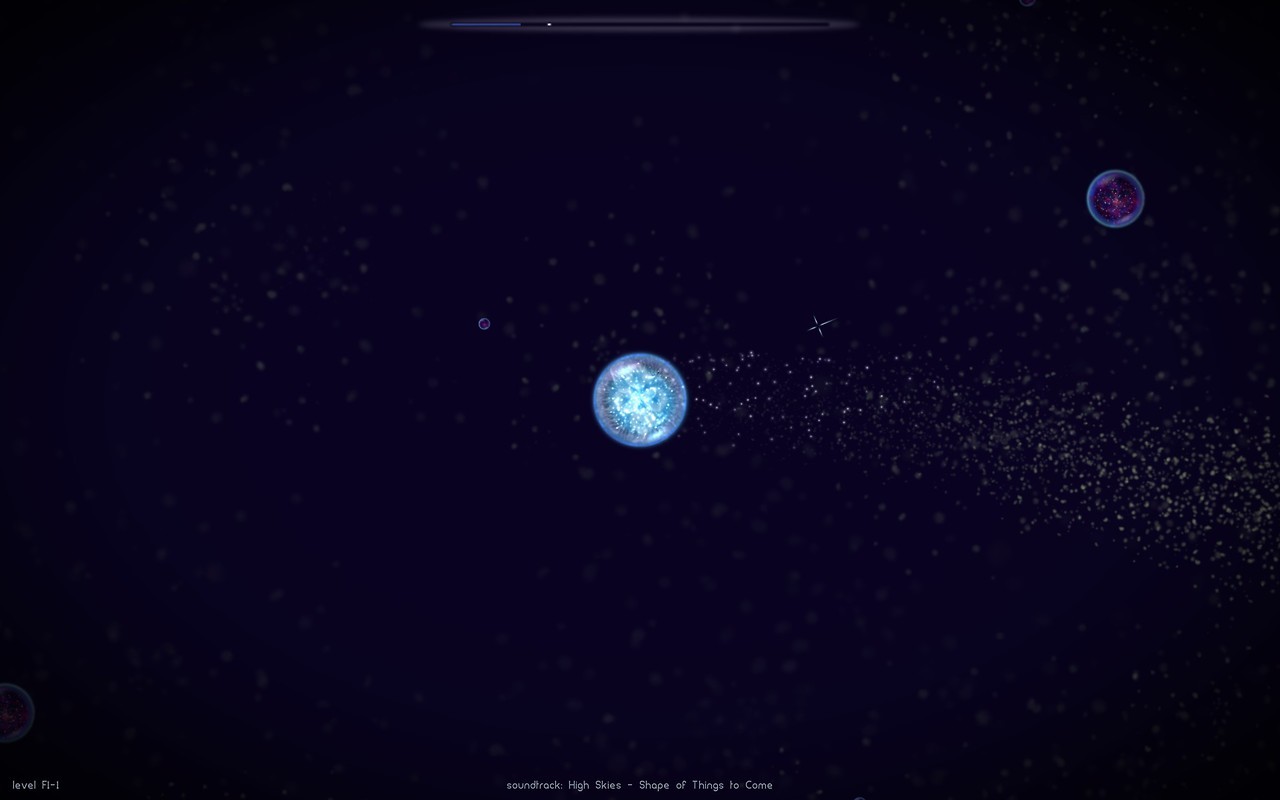

https://gearhead.itch.io/gearhead-arena

Most interestingly, Joseph Hewett has published some notes on the random story generation in Gearhead. The generator uses a “big list of story fragments” and an algorithm for arranging them. According to him, this approach was inspired by reader-response criticism, Scott McCloud’s explanation of closure, and Propp’s narrative functions. Each story chunk (called a “Plot”) is placed in a context of three variables: Enemy, Mystery, and Misfortune. These context variables dictate which Plots can be picked when the algorithm needs a new core story Plot.
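To make the idea of context-gated Plots a little more concrete, here is a minimal Python sketch. It is not Gearhead's actual code or data format: the `Plot` class, the `requires`/`sets` fields, the function names, and the three example fragments are all invented for illustration. The only thing taken from Joseph's notes is the idea that the Enemy, Mystery, and Misfortune variables decide which Plots are eligible next.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Plot:
    """One story fragment (hypothetical structure, not Gearhead's own)."""
    name: str
    # Context values this Plot needs; a key left out means "anything goes".
    requires: dict = field(default_factory=dict)
    # Context values this Plot sets once it has played out.
    sets: dict = field(default_factory=dict)


def eligible(plots, context):
    """Return the Plots whose requirements all match the current context."""
    return [
        p for p in plots
        if all(context.get(key) == value for key, value in p.requires.items())
    ]


def generate_story(plots, context, length, rng):
    """Pick up to `length` Plots, updating the context after each pick."""
    context = dict(context)  # work on a copy so the caller's dict is untouched
    story = []
    for _ in range(length):
        candidates = eligible(plots, context)
        if not candidates:
            break  # nothing fits the current context, so the story ends here
        plot = rng.choice(candidates)
        story.append(plot.name)
        context.update(plot.sets)
    return story


# Invented example fragments; the real list is far larger.
PLOTS = [
    Plot("A stranger attacks your mecha",
         requires={"Enemy": None},
         sets={"Enemy": "unknown"}),
    Plot("You learn who sent the attacker",
         requires={"Enemy": "unknown"},
         sets={"Enemy": "known", "Mystery": "motive"}),
    Plot("You uncover the motive",
         requires={"Enemy": "known", "Mystery": "motive"},
         sets={"Mystery": "solved"}),
]

START = {"Enemy": None, "Mystery": None, "Misfortune": None}
story = generate_story(PLOTS, START, length=3, rng=random.Random(0))
print(story)
```

With only three fragments there is exactly one eligible Plot at each step, so the result is always the same chain. The variety comes from having many Plots that share the same requirements.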

Joseph points out that many different methods could be used to arrange these Plot chunks, from simple Markov chains to more complex methods. (You could, for example, use Tracery if you feed the plots into it as strings.)
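As a rough illustration of the simple end of that range, here is what a first-order Markov chain over Plot labels might look like in Python. The transition table, the labels, and the `markov_story` function are all made up for this sketch and are not taken from Gearhead. Note that a chain like this only looks at the previous Plot, so it has none of the Enemy/Mystery/Misfortune context described above.

```python
import random

# Hypothetical transition table: each Plot label maps to the labels that may
# follow it, with relative weights. "START" and "END" are marker states.
TRANSITIONS = {
    "START": {"ambush": 2, "rumor": 1},
    "ambush": {"investigate": 3, "revenge": 1},
    "rumor": {"investigate": 1},
    "investigate": {"revelation": 1},
    "revelation": {"revenge": 1, "END": 1},
    "revenge": {"END": 1},
}


def markov_story(transitions, rng, max_steps=10):
    """Walk the chain from START until END (or until max_steps picks)."""
    state = "START"
    story = []
    for _ in range(max_steps):
        options = transitions[state]
        # Weighted random choice of the next Plot label.
        state = rng.choices(list(options), weights=list(options.values()))[0]
        if state == "END":
            break
        story.append(state)
    return story


print(markov_story(TRANSITIONS, random.Random()))
```

Each run prints a short list of labels such as an ambush followed by an investigation, with the exact sequence depending on the random draws.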