Deep Image Matting

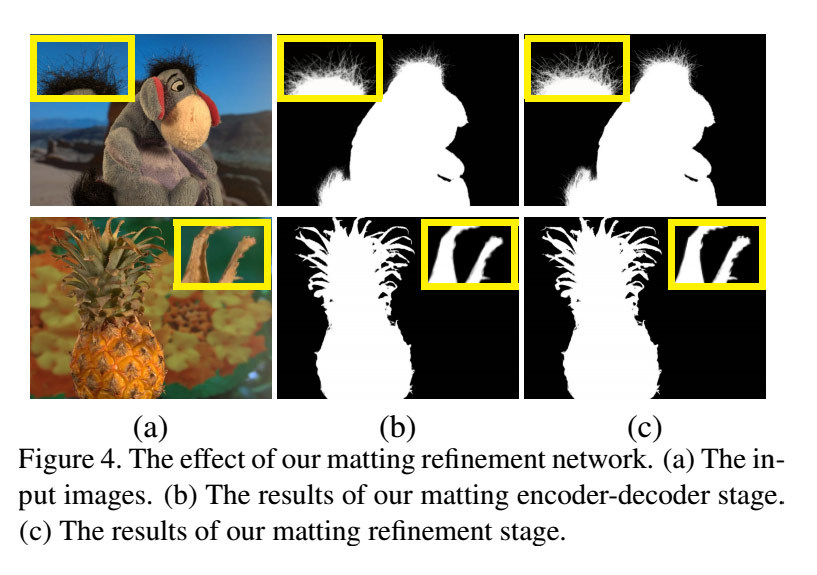

While I’m talking about neural networks, I should point out this research, which uses deep learning to extract better mattes for separating foreground objects from the background.

Matting is an old, old problem in visual effects, dating right back to the very earliest trick pictures. Being able to combine two images into one, or conversely to remove part of an image to make the combination possible, is fundamental to VFX. Early tricks to achieve it included putting a matte on a pane of glass in front of the subject, or blocking off part of the frame so that it wouldn’t be exposed. Techniques such as bluescreen made it easier, but even today separating the foreground from the background can be difficult and expensive. Especially for hair.
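
In compositing terms, a matte is a per-pixel opacity α between 0 and 1. Laying a foreground F over a background B is conventionally written, for each pixel, as:

$$C = \alpha F + (1 - \alpha) B$$

Matting runs this backwards: given only the combined image C, recover α (and usually F). Per pixel that gives three equations (one per color channel) but seven unknowns (α plus the colors of F and B). Even when the backing color B is known, as with a bluescreen, there are still four unknowns for three equations, so every method has to bring in extra assumptions.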

Existing techniques rely on color information: bluescreen works by keying out the blue backing behind the subject, so in compositing you just throw away anything that’s too blue. In practice, you also have to compensate for color spill, where the background color bleeds onto the actors in the foreground. There are many ways of dealing with this, but fine details such as hair or fur remain difficult to process.
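
The color-based approach can be sketched as a naive keyer. This is a minimal illustration in Python with NumPy, not how any production keyer works. It assumes float RGB images in [0, 1], and the function names and the `threshold`/`softness` parameters are hypothetical. Alpha comes from how much bluer each pixel is than its red and green channels, and spill is suppressed with the common trick of clamping blue to no more than the larger of red and green.

```python
import numpy as np


def blue_screen_key(rgb: np.ndarray, threshold: float = 0.1, softness: float = 0.2):
    """Naive blue-screen key (illustrative sketch; parameters are hypothetical).

    rgb: float array of shape (H, W, 3) with values in [0, 1].
    Returns (alpha, despilled), where alpha has shape (H, W):
    1.0 = keep (foreground), 0.0 = discard (blue backing).
    """
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    # How much bluer than its other channels each pixel is.
    blueness = b - np.maximum(r, g)
    # Below `threshold`: fully opaque. Above `threshold + softness`: fully
    # transparent. A linear ramp in between gives soft edges.
    alpha = 1.0 - np.clip((blueness - threshold) / softness, 0.0, 1.0)
    # Spill suppression: clamp blue to at most max(red, green), so blue
    # light bounced onto the foreground is neutralized.
    despilled = rgb.copy()
    despilled[..., 2] = np.minimum(b, np.maximum(r, g))
    return alpha, despilled


def composite(fg: np.ndarray, alpha: np.ndarray, bg: np.ndarray) -> np.ndarray:
    """Lay fg over bg with a per-pixel alpha: alpha * fg + (1 - alpha) * bg."""
    a = alpha[..., None]  # broadcast alpha across the color channels
    return a * fg + (1.0 - a) * bg


# Usage: a pure blue backing pixel, a skin tone, and a bluish edge pixel.
img = np.array([[[0.0, 0.0, 1.0], [0.9, 0.6, 0.5], [0.2, 0.3, 0.5]]])
alpha, clean = blue_screen_key(img)  # alpha ≈ [[0.0, 1.0, 0.5]]
red_bg = np.zeros_like(img)
red_bg[..., 0] = 1.0
result = composite(clean, alpha, red_bg)
```

Note what happens at a mixed pixel, where a strand of hair only partly covers the backing. Its alpha comes from a blueness ramp rather than from its true coverage, so fine detail is either eaten away or left with a blue fringe. That is exactly where color keying struggles.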

Enter this technique: instead of relying primarily on color information, it trains a neural net to look for the structures that mark the boundaries between foreground and background. Combined with a large dataset of example objects, it produces rather effective results, even on images with no clear color separation.