CycleGAN

One big drawback of previous style-transfer methods was that you needed to train the network on image pairs. In order to figure out the similarities you’d need something like a photo and a painting of a photo. Unfortunately, there aren’t many examples of that in the wild. Things like semantic annotations helped, and there have been attempts with automated processes, but this was a general limitation.

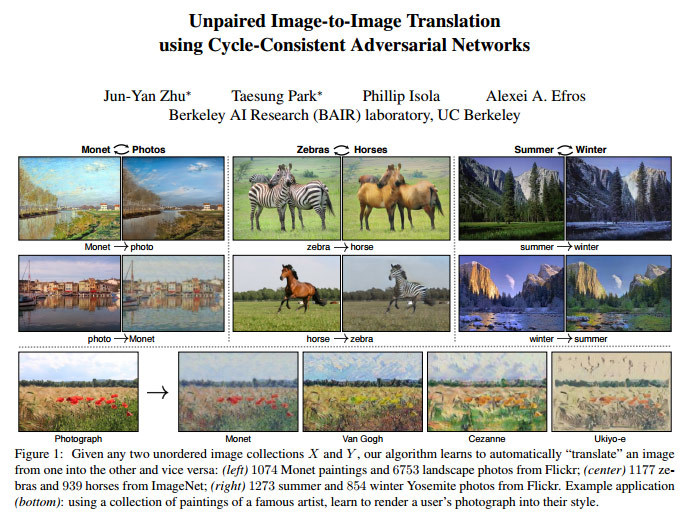

As you might guess, that’s not true anymore. Jun-Yan Zhu, Taesung Park, Phillip Isola, Alexei A. Efros,, the same team who brought us pix2pix have come up with a way to do the training without paired images.

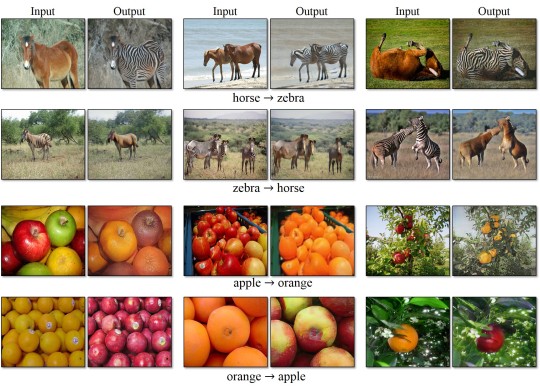

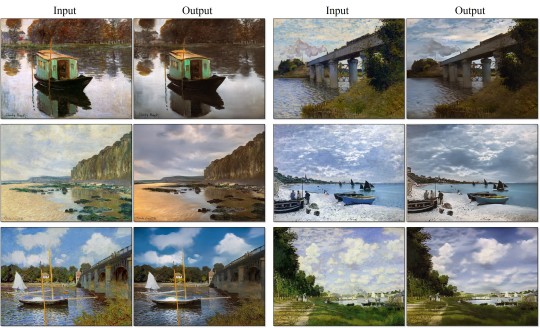

The results are, well, pretty good:

Remember, the one of the limitations of things like edges2cats is that the edges were created with an automated process that missed a lot of details or highlighted irrelevant ones. Being able to use completely separate datasets for the training opens up a host of new options.