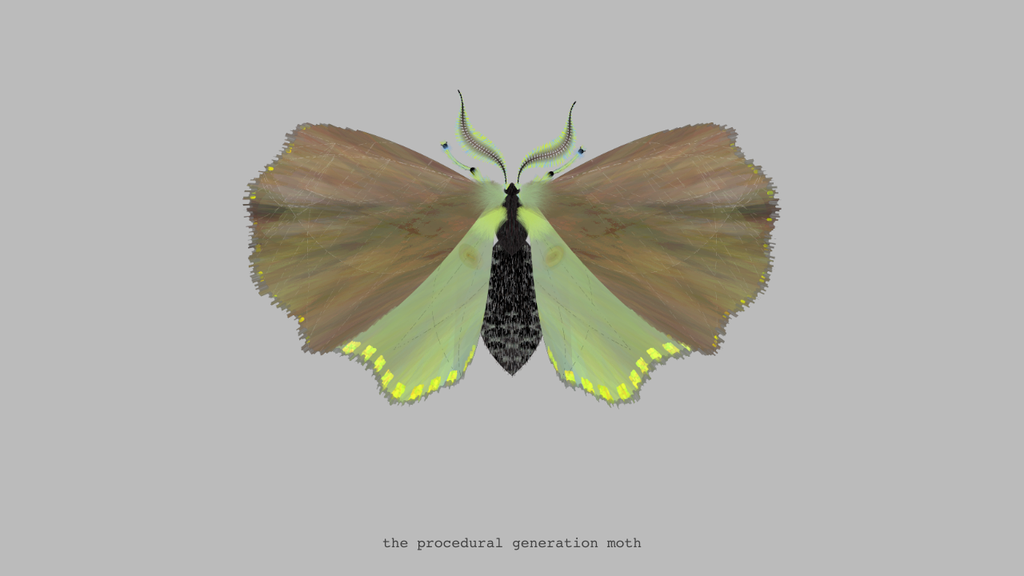

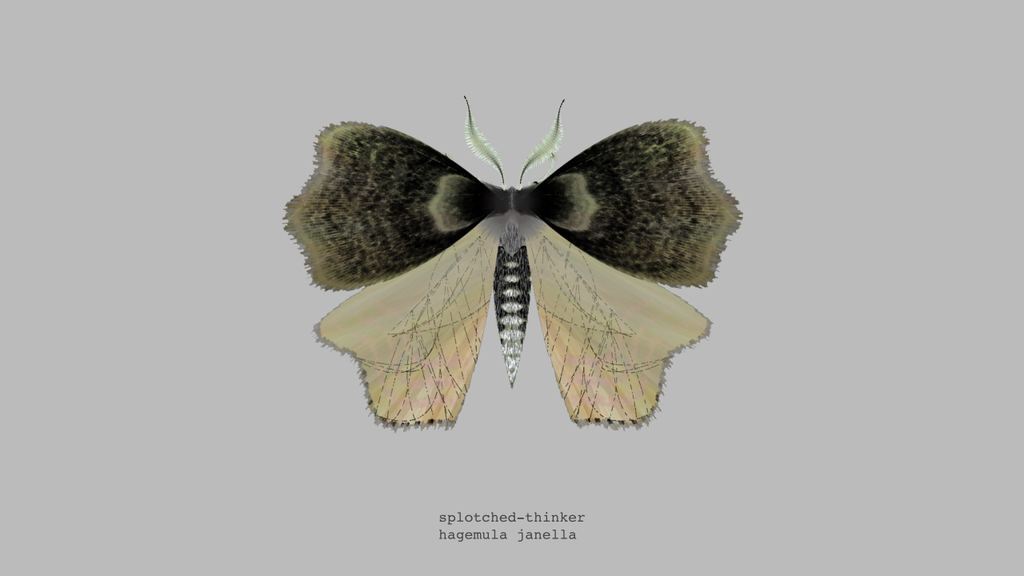

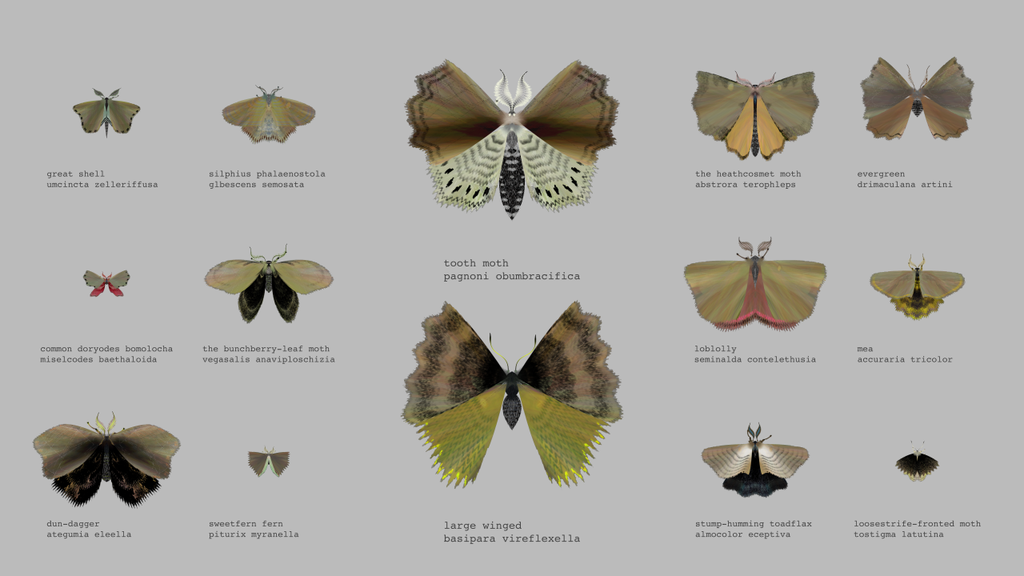

Substrate (2003)

Shortly after the turn of the century, there was a new programming environment called Processing that was uniquely tailored to producing visualizations and generative art. Jared Tarbell was one of the artists who discovered it early. Jared had previously done many interactive generative artworks in Flash, releasing them as open source. He did the same with his Processing projects, including the one you see above: Substrate.

Substrate is, I think, one of his most significant works. It’s pretty simple: lines growing out of other lines, forming crystalline patterns. The result is something that appears complex: city streets in endless configurations. It’s no wonder it was one of Jared’s most commonly mentioned works at the time.
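To make "lines growing out of other lines" concrete, here is a minimal Python sketch of that kind of rule. It is not Tarbell's source, which is written in Processing. The function name `grow_cracks`, its parameters, the 0.42 step size, and the choice of three starting seeds are all assumptions made for illustration. Each crack starts on a cell an earlier crack has already crossed, heads off roughly perpendicular to it, and stops when it reaches the edge or another crack.

```python
import math
import random


def grow_cracks(size=60, max_cracks=20, steps=2000, seed=1):
    """Return a size x size grid whose cells hold a crack angle (degrees) or None."""
    rng = random.Random(seed)
    grid = [[None] * size for _ in range(size)]
    occupied = []  # cells that some crack has already passed through

    def mark(cx, cy, angle):
        if grid[cy][cx] is None:
            occupied.append((cx, cy))
        grid[cy][cx] = angle

    # Seed a few cells so the first cracks have something to branch from.
    for _ in range(3):
        mark(rng.randrange(size), rng.randrange(size), rng.uniform(0, 360))

    def new_crack():
        # Start on an existing crack and head off roughly perpendicular to it.
        cx, cy = rng.choice(occupied)
        turn = rng.choice((-90, 90)) + rng.uniform(-2, 2)
        return {"x": cx + 0.5, "y": cy + 0.5, "home": (cx, cy),
                "angle": (grid[cy][cx] + turn) % 360}

    cracks = [new_crack() for _ in range(3)]
    for _ in range(steps):
        for i in range(len(cracks)):
            c = cracks[i]
            # Advance by less than one cell per step.
            c["x"] += 0.42 * math.cos(math.radians(c["angle"]))
            c["y"] += 0.42 * math.sin(math.radians(c["angle"]))
            cx, cy = math.floor(c["x"]), math.floor(c["y"])
            if (cx, cy) == c["home"]:
                continue  # still leaving the cell it branched from
            inside = 0 <= cx < size and 0 <= cy < size
            if inside and (grid[cy][cx] is None or grid[cy][cx] == c["angle"]):
                mark(cx, cy, c["angle"])  # open ground, or this crack's own trail
            else:
                # Hit an edge or another crack: restart elsewhere, and maybe add one more.
                cracks[i] = new_crack()
                if len(cracks) < max_cracks:
                    cracks.append(new_crack())
    return grid


if __name__ == "__main__":
    # Rough text rendering: '#' where a crack passed, '.' elsewhere.
    for row in grow_cracks():
        print("".join("." if cell is None else "#" for cell in row))
```

Storing the angle in each cell, rather than just a filled/empty flag, is what lets a new crack know which way its parent was heading. The sketch leaves out everything visual, such as the palette and shading.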

Speaking of his work as a whole, he wrote:

> I write computer programs to create graphic images.
>
> With an algorithmic goal in mind, I manipulate the work by finely crafting the semantics of each program. Specific results are pursued, although occasionally surprising discoveries are made.
>
> I believe all code is dead unless executing within the computer. For this reason I distribute the source code of my programs in modifiable form. Modifications and extensions of these algorithms are encouraged.

He would later go on to co-found a little website you’ve probably never heard of: Etsy.

The embedded Java version of Substrate is difficult to run in a browser (try updating the Java install in Firefox). But the project has always been open source, and it runs just fine when ported to the latest version of Processing, as long as you can hunt down the “pollockShimmering.gif” it uses to derive its palette.

The web page for Substrate on complexification.net has a number of images from different versions, showing the development process and the exploration of possibilities.

I think we can learn three things from Jared Tarbell. First, the algorithms he released. Second, a serendipitous, artistic approach to exploring the possibilities hidden in code. And lastly, that deep and unexpected results can sometimes come from following simple rules.