Plantarium (2016)

Almost-but-not-quite made for ScreensaverJam, Plantarium (not to be confused with Planetarium) is a plant generator by Daniel Linssen.

http://managore.itch.io/plantarium

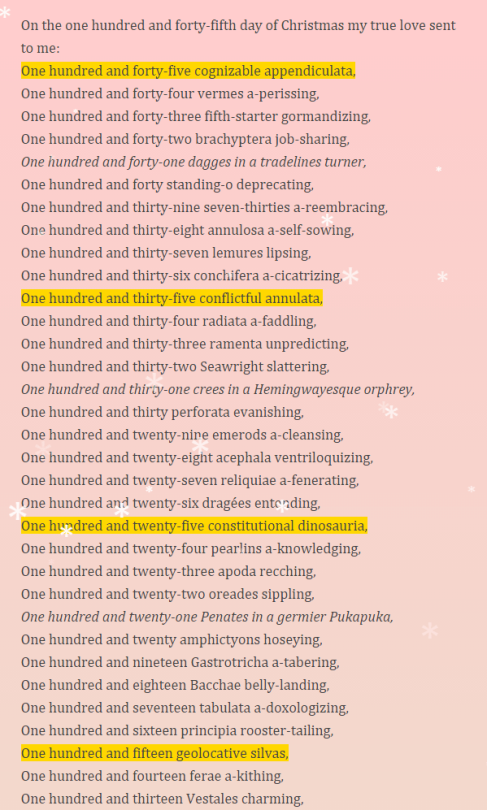

Why should Christmas be limited to twelve days? Drummers drumming and lords a-leaping are alright, but why doesn’t your true love send you twenty-one diurna in a nanomotor salley or one hundred and four quadrumana water-skiing?

Not content with the status quo, for NaNoGenMo 2015 Hugovk wrote this songbook to extrapolate the rest of the song. And then turned it into an audio book.
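The song is cumulative: each verse repeats every earlier gift in reverse order, so extrapolating it mostly means inventing new gifts and ordinals. Below is a minimal sketch of that verse structure. The three-day gift list is a hypothetical stand-in for whatever hugovk's generator produces; this is not his code.

```python
# Hypothetical seed data: the first three days of the traditional song.
# Extending the song means growing these two lists, which is where a generator comes in.
ORDINALS = ["first", "second", "third"]
GIFTS = [
    "a partridge in a pear tree",
    "two turtle doves",
    "three French hens",
]


def verse(day: int) -> str:
    """Build the verse for `day` (1-based) by listing that day's gift and every earlier one."""
    if not 1 <= day <= len(GIFTS):
        raise ValueError(f"no gift defined for day {day}")
    lines = [f"On the {ORDINALS[day - 1]} day of Christmas my true love sent to me:"]
    for d in range(day, 0, -1):  # newest gift first, counting back to day one
        gift = GIFTS[d - 1]
        if d == 1 and day > 1:
            gift = "and " + gift  # the partridge gets an "and" once it is no longer alone
        lines.append(gift)
    return "\n".join(lines)


print(verse(3))
```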

There’s also a Twitter bot, if you’d like to be reminded of the holiday season all year round: https://twitter.com/MyTruLuvSent2Me

image by Erik Bernhardsson

Erik Bernhardsson had a lot of fonts. Which he decided to feed into a neural network. Which he then used to generate new characters.

The result is pretty interesting. It can do a decent job of guessing what a new character would look like for a font when it has seen some of that font’s other letters. Because each font is now represented as a vector, it can interpolate between two arbitrary fonts. And it can generate entirely new fonts, as seen in the animation above.
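Interpolating between two fonts works because each font becomes a point in the network's vector space: blend the two vectors and feed the blends back through the network to draw intermediate characters. A minimal sketch of the blending step, assuming plain linear interpolation; the decoder that would turn each vector back into glyphs is not shown, and the function names are hypothetical.

```python
from typing import List

Vector = List[float]


def lerp(a: Vector, b: Vector, t: float) -> Vector:
    """Linearly interpolate between two font vectors: t=0 gives a, t=1 gives b."""
    if len(a) != len(b):
        raise ValueError("font vectors must have the same length")
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def interpolation_steps(a: Vector, b: Vector, steps: int) -> List[Vector]:
    """Evenly spaced vectors from a to b (inclusive), ready for a hypothetical decoder."""
    if steps < 2:
        raise ValueError("need at least two steps to include both endpoints")
    return [lerp(a, b, i / (steps - 1)) for i in range(steps)]
```

Linear blending is the simplest choice; it assumes the space between two fonts is populated by plausible fonts, which is roughly what the animation suggests.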

The data and source code are available, if you’d like to play around with it yourself. Erik also wrote a followup post linking to a bunch of other font and character generation and analysis stuff.

http://erikbern.com/2016/01/21/analyzing-50k-fonts-using-deep-neural-networks/

As I’ve noted, travel narratives and guidebooks were a common framing device in 2015’s NaNoGenMo. Emily Short, dame of interactive fiction, contributed her own post-NaNoGenMo generated travel guide to imaginary lands, The Annals of the Parrigues. She approached the project as a collaboration between herself and her machine co-writer, playing to the strengths of each.

The text is unusually readable. While you’ll certainly notice repetition, there are enough different patterns at work that you can still find something unexpected in another page or two. The Annals demonstrates multiple layers of organization in both its formal patterns and on an aesthetic, literary level. This is one procedurally generated novel that is interesting to the end.

That’s not the only reason that you should read it. The second half of the work is a discussion of the procedural generation and the principles that went into co-writing with the machine. The organizing elements of Salt, Mushroom, Beeswax, Venom, and Egg are worth studying both as an aesthetic tarot and as common principles for generative works.

If you want to write for procedural generation (which is not quite the same as writing procedural generation, though they overlap), Emily Short’s remarks are particularly valuable. The discussion of Venomous writing, for example, or the Mushroomy nature of Markov chains, or the proper use of Beeswax: all things I found illuminating and inspirational.
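For readers who haven't met one, a Markov chain text generator is short enough to sketch: record which words follow each run of words in a source text, then walk those records at random, recombining fragments of the original. This is a generic word-level chain with a made-up corpus and hypothetical function names, not anything taken from the Annals.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

State = Tuple[str, ...]


def build_chain(words: Sequence[str], order: int = 1) -> Dict[State, List[str]]:
    """Map each run of `order` consecutive words to every word seen right after it."""
    chain: Dict[State, List[str]] = defaultdict(list)
    for i in range(len(words) - order):
        chain[tuple(words[i:i + order])].append(words[i + order])
    return dict(chain)


def generate(chain: Dict[State, List[str]], start: State, length: int,
             rng: random.Random) -> List[str]:
    """Walk the chain from `start`; repeated followers make common continuations likelier."""
    out = list(start)
    for _ in range(length):
        followers = chain.get(tuple(out[-len(start):]))
        if not followers:
            break  # dead end: this state never had a successor in the source text
        out.append(rng.choice(followers))
    return out


corpus = "the salt road leads to the salt sea and the sea leads home".split()
chain = build_chain(corpus, order=1)
print(" ".join(generate(chain, ("the",), 8, random.Random(7))))
```

Raising `order` makes the output hew closer to the source text; lowering it makes it stranger.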

The book seems to have inspired a little bit of fanfiction; there’s a subreddit dedicated to the Parrigues and a wikia, though they’re both a bit sparse.

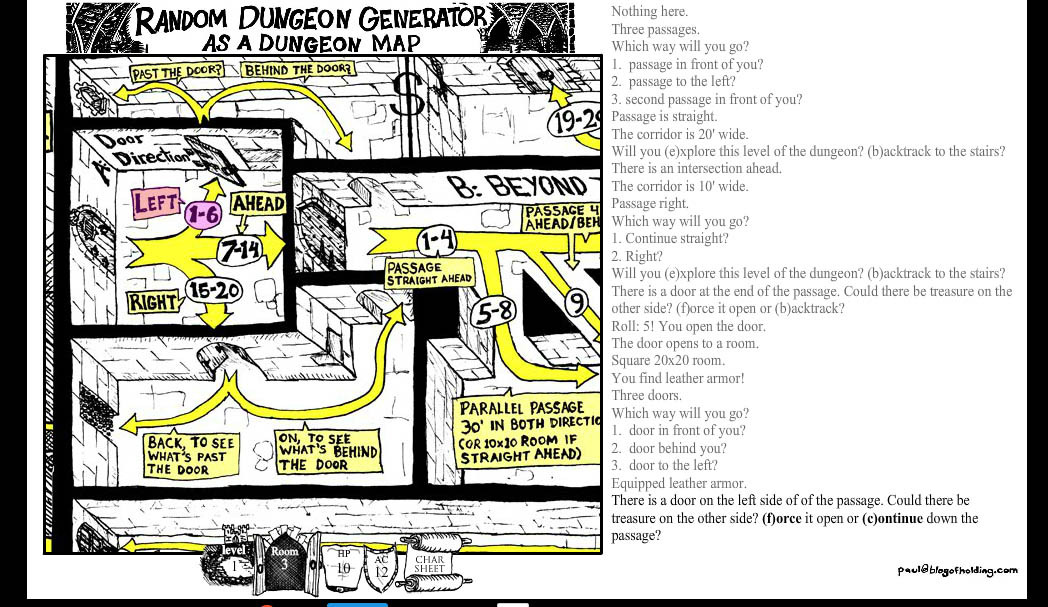

As I’m sure you’ll recall, Dungeons & Dragons has a long history of including dungeon generators for solo adventures. Dungeon Robber is a digital implementation of Gary Gygax’s solo dungeon creation rules. Paul Hughes based it on his poster that recreates the original rules from early D&D.

It’s quite challenging and somewhat arbitrary (very authentic), and it has a host of extra features, including obscure things from the original such as a rare magic pool that grants wishes. (You’ll want to use your keyboard instead of clicking on the options.)

Play it online here: http://blogofholding.com/dungeonrobber/
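The old solo dungeon rules work by rolling dice against lookup tables as you walk: every so often you roll to see whether the corridor continues, branches, or opens into something. A minimal sketch of that mechanic follows. The table is an illustrative assumption loosely in the style of early D&D, not Gygax’s exact entries, and not Dungeon Robber’s code.

```python
import random
from typing import List, Sequence, Tuple

# Illustrative table: (highest d20 roll covered, result).
# The ranges and entries are simplified assumptions, not the original rules' numbers.
PASSAGE_TABLE: List[Tuple[int, str]] = [
    (2, "continue straight"),
    (5, "door"),
    (10, "side passage"),
    (13, "passage turns"),
    (16, "chamber"),
    (17, "stairs"),
    (18, "dead end"),
    (19, "trick or trap"),
    (20, "wandering monster"),
]


def roll_on_table(table: Sequence[Tuple[int, str]], roll: int) -> str:
    """Return the first entry whose upper bound covers the roll."""
    for upper, result in table:
        if roll <= upper:
            return result
    raise ValueError(f"roll {roll} is outside the table")


def explore(steps: int, rng: random.Random) -> List[str]:
    """Roll a d20 on the table once per stretch of corridor walked."""
    return [roll_on_table(PASSAGE_TABLE, rng.randint(1, 20)) for _ in range(steps)]


print(explore(5, random.Random(42)))
```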

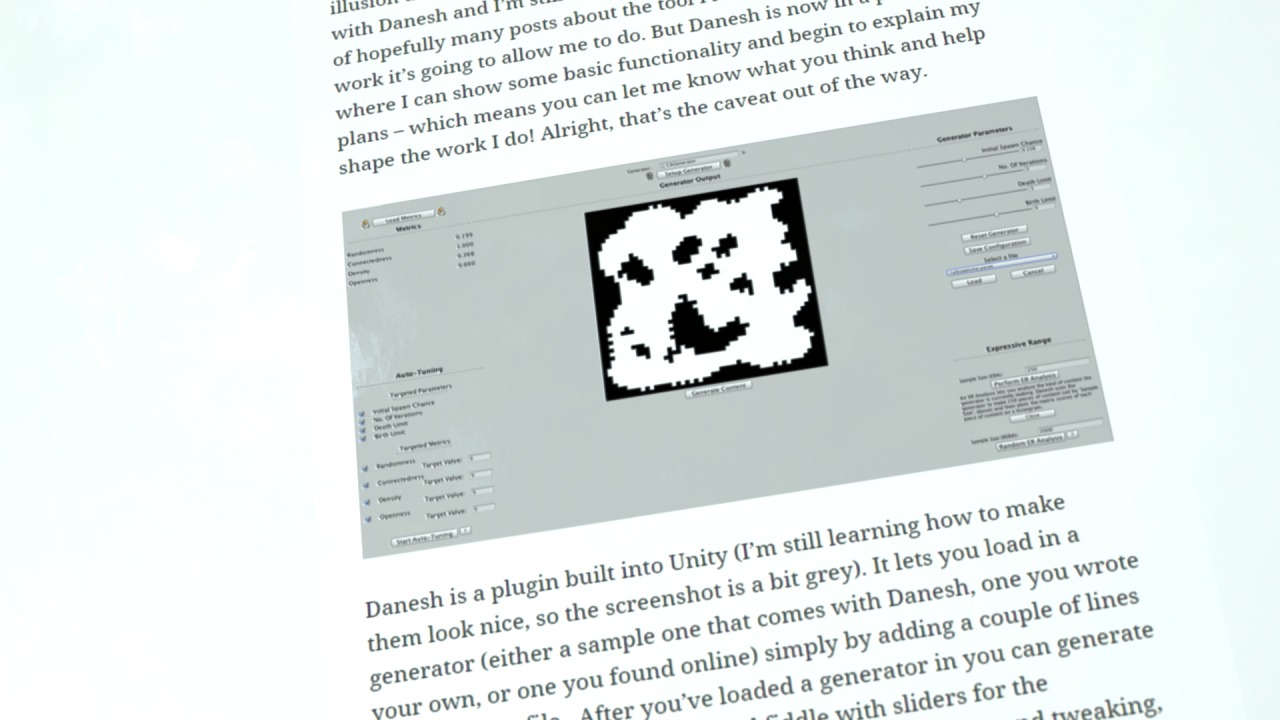

Yesterday, Michael Cook announced Danesh, his new tool that aims to make it easier to get started with procedural generation.

It previews a generator’s output, measures its expressive range using Expressive Range Analysis, and can help you find better parameter settings for your code.
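Expressive Range Analysis boils down to: generate a large batch of artifacts, score each one on two metrics, and plot where they land as a 2D histogram, so you can see which kinds of output the generator favors and which it never makes. A minimal sketch of the counting step, assuming both metrics return values in [0, 1]; the toy level generator and its metrics are hypothetical, and this is not how Danesh itself is implemented.

```python
import random
from collections import Counter
from typing import Callable, List, TypeVar

T = TypeVar("T")


def expressive_range(generate: Callable[[random.Random], T],
                     metric_x: Callable[[T], float],
                     metric_y: Callable[[T], float],
                     samples: int = 1000, bins: int = 10,
                     seed: int = 0) -> Counter:
    """Generate many artifacts and count how many land in each (x, y) metric bin."""
    rng = random.Random(seed)
    counts: Counter = Counter()
    for _ in range(samples):
        artifact = generate(rng)
        x = min(int(metric_x(artifact) * bins), bins - 1)  # clamp 1.0 into the top bin
        y = min(int(metric_y(artifact) * bins), bins - 1)
        counts[(x, y)] += 1
    return counts


# Hypothetical toy generator: a 1D strip of solid (True) and empty (False) tiles.
def toy_level(rng: random.Random) -> List[bool]:
    return [rng.random() < 0.3 for _ in range(20)]


def density(level: List[bool]) -> float:
    return sum(level) / len(level)


def smoothness(level: List[bool]) -> float:
    """Fraction of neighbouring tiles that match."""
    return sum(a == b for a, b in zip(level, level[1:])) / (len(level) - 1)


histogram = expressive_range(toy_level, density, smoothness, samples=500, bins=5)
```

Rendering `histogram` as a heatmap gives the familiar ERA plot; empty regions are the outputs the generator cannot reach.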

It’s not released in the wild yet, but he is looking for testers, so send him an email if you want to help test the software.

http://www.gamesbyangelina.org/2016/02/introducing-danesh-part-1/

A livecoding musical performance by Benoît and the Mandelbrots, a livecoding laptop band from Germany. Their most recent performance was last weekend, at the GLOBALE: Performing Sound, Playing Technology festival, but this video is from a January 2015 performance.

For this performance they used SuperCollider, “a programming language for real time audio synthesis and algorithmic composition”.

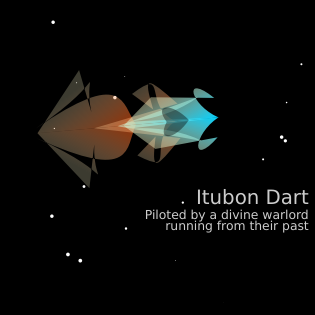

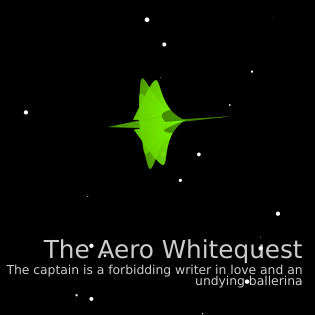

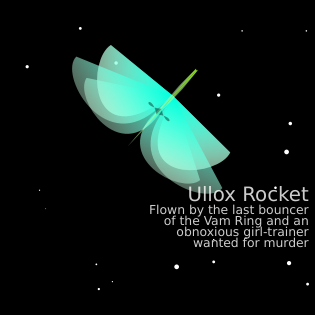

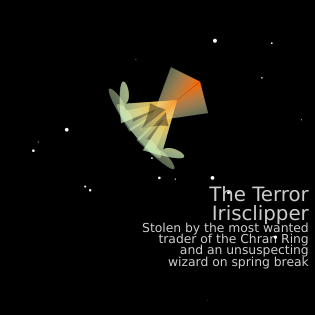

What I love about these exquisite little ships from Kate Compton’s Tiny Space Adventures is the way that each one manages to evoke a story.

Like many of the best generators of this sort of vignette, they make me want to read fan fiction about the adventures that prompted these circumstances. Why is the divine warlord running from their past? Where are the magma-princesses going? Why did the foul-mouthed cortesian commandeer the Dravippex? Who broke the sapient bouncer’s heart? What’s the wizard going to do when they discover their spring break ship has been stolen?
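Vignettes like these are often built from a text grammar: a template with slots, where each slot expands to a random choice that may contain more slots. A minimal sketch in the `#symbol#` style popularized by Kate Compton’s Tracery; the rules below are hypothetical, and I don’t know how Tiny Space Adventures is actually built.

```python
import random
import re
from typing import Dict, List

# Hypothetical grammar: each key expands to one of its rules, and #key# marks a nested slot.
GRAMMAR: Dict[str, List[str]] = {
    "origin": ["The #role# is running from #motive# aboard #ship#."],
    "role": ["divine warlord", "sapient bouncer", "wizard"],
    "motive": ["their past", "a broken heart", "a debt"],
    "ship": ["the Dravippex", "a borrowed spring-break cruiser"],
}

SLOT = re.compile(r"#(\w+)#")


def expand(symbol: str, grammar: Dict[str, List[str]], rng: random.Random) -> str:
    """Pick a rule for `symbol`, then recursively expand every #slot# inside it."""
    rule = rng.choice(grammar[symbol])
    return SLOT.sub(lambda m: expand(m.group(1), grammar, rng), rule)


print(expand("origin", GRAMMAR, random.Random(3)))
```

The story-prompting effect comes from slots that imply a history (a motive, a stolen ship) without explaining it.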

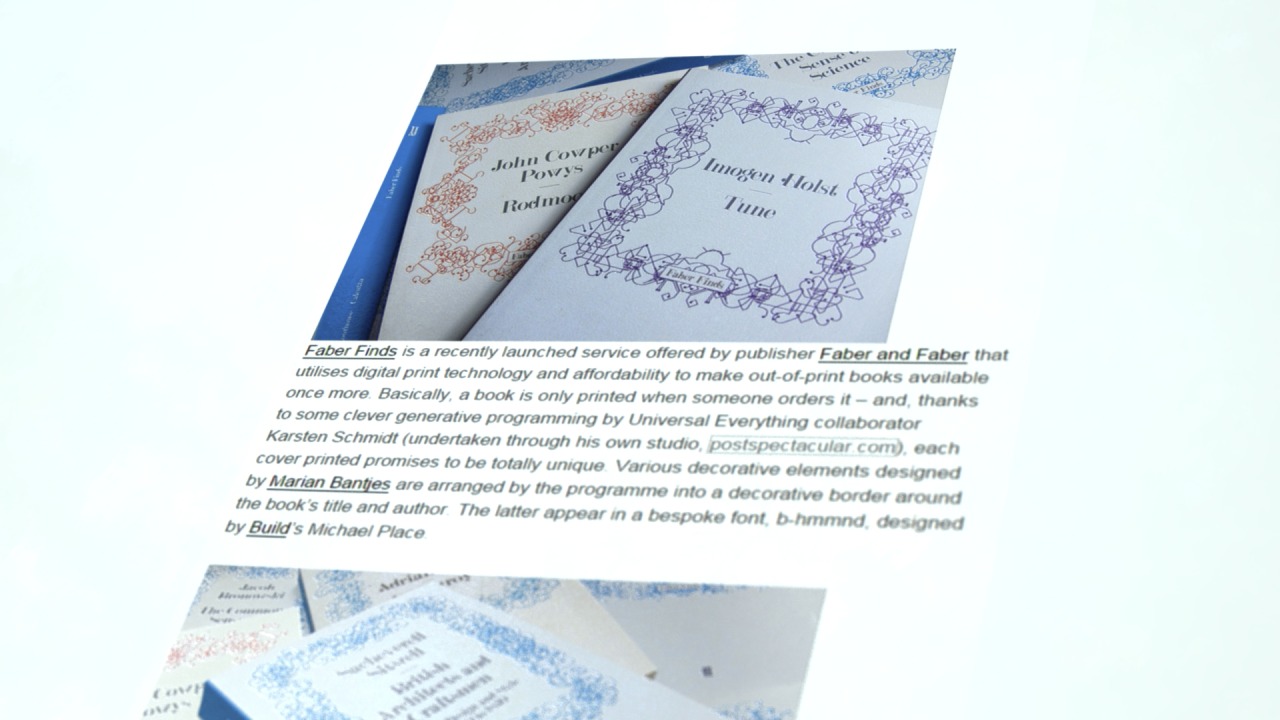

Here’s a project from 2008 that I just learned about: a publisher set up a service to bring back out-of-print books on demand. They commissioned Karsten Schmidt to build a “design machine” to generatively create covers for those books, based on elements created by Marian Bantjes.

Instead of art-directing a specific concept, they built software that generalized the rules behind the artistic choices. The system has an order to it that reflects the different genres of books and the constraints of the design, while still making each book completely unique.

The final system works on the fly to generate a cover only when it needs to be displayed or printed. In theory, every cover printed can be completely unique, which is one way that generative processes are leading us towards a post-mass-production age.
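One common way to get on-the-fly covers that are unique yet repeatable is to seed a random number generator from the book’s identifier, so the cover never needs to be stored: the same ID always regenerates the same design, while genre-level rules constrain the choices. A minimal sketch under that assumption; the palettes, fields, and ID format are hypothetical and not details of Schmidt’s system.

```python
import hashlib
import random
from typing import Dict, List

# Hypothetical per-genre palettes standing in for the real system's design constraints.
GENRE_PALETTES: Dict[str, List[str]] = {
    "fiction": ["#8c2d19", "#d9a441", "#2f4858"],
    "poetry": ["#4a4e69", "#9a8c98", "#c9ada7"],
}


def book_seed(book_id: str) -> int:
    """Derive a stable seed from the ID (Python's built-in hash() of str varies between runs)."""
    digest = hashlib.sha256(book_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def cover_spec(book_id: str, genre: str, n_elements: int = 5) -> dict:
    """Describe a cover: the genre fixes the palette, the seeded RNG fixes the layout."""
    rng = random.Random(book_seed(book_id))
    palette = GENRE_PALETTES[genre]
    elements = [
        {
            "color": rng.choice(palette),
            "x": round(rng.uniform(0.0, 1.0), 3),
            "y": round(rng.uniform(0.0, 1.0), 3),
            "scale": round(rng.uniform(0.2, 1.0), 3),
            "rotation": rng.choice([0, 90, 180, 270]),
        }
        for _ in range(n_elements)
    ]
    return {"book_id": book_id, "genre": genre, "elements": elements}
```

To make every printed copy unique rather than every title, the seed could include a copy number alongside the ID.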

Industrial production at scale used to mean that everything had to be identical, and the humans had to be squeezed into the identical fit. Now we can build machines that are custom-tailored to fit the humans. Because they enable flexibility, generative processes can be more humanizing than traditional, rigid approaches.

A more generative future is one that is more capable of adjusting to differences and disabilities and meeting humans as they are, not as they’re cut down to average size to fit into a machine.

You can read more details about the system at: http://www.creativereview.co.uk/cr-blog/2008/july/faber-finds-generative-book-covers/

There’s a lot of procedural generation going on in XCOM 2, but it’ll take me a while to see enough of it to have something interesting to say. However, there is one aspect of the game that, while not strictly procedural generation, is closely related: the automated camera.

Just like text generation has ways to vary the text, there are also ways to vary the visuals. Dynamic lighting is one way: Elite Dangerous gets a lot of mileage out of varying the lighting according to the nearby stars. But it has its drawbacks: varying color is less noticeable than varying silhouette.

A dynamic camera, on the other hand, can significantly change how a scene looks, even if the lighting and animation are identical. Different framing can dramatically change how a shot feels. A dynamic camera also lets the storytelling peek beyond the immediate player character. A dynamic camera showing different parts of the simulation was often included in flight simulators like F-15 II and European Air War.

There’s been a lot of research into automated shot composition and editing (some examples: cinematographic user models, tracking camera, automated editing). Designing an AI to handle virtual cinematography is tricky because it not only has to handle the usual 3D camera problems (like not going inside walls) but it also has to pick the best angles on its own.
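Those two halves of the problem map onto a common pattern: hard constraints that rule a candidate shot out (the camera is inside a wall, the subject is occluded), then a soft score that ranks whatever survives. A minimal sketch of that pattern; the `Shot` fields, the drama score, and the distance penalty are hypothetical, and this is not how XCOM 2’s camera works.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Shot:
    name: str
    distance: float    # metres from camera to subject
    occluded: bool     # does geometry block the line of sight?
    inside_wall: bool  # would the camera sit inside level geometry?
    drama: float       # hypothetical 0..1 rating of how expressive the angle is


def pick_shot(candidates: List[Shot], preferred_distance: float = 3.0) -> Optional[Shot]:
    """Drop shots that break hard constraints, then take the best-scoring survivor."""
    valid = [s for s in candidates if not s.occluded and not s.inside_wall]
    if not valid:
        return None  # caller falls back to a default camera

    def score(s: Shot) -> float:
        # Reward expressive angles, penalize straying from the preferred framing distance.
        return s.drama - 0.1 * abs(s.distance - preferred_distance)

    return max(valid, key=score)


shots = [
    Shot("over_shoulder", 2.5, False, False, 0.6),
    Shot("low_angle", 4.0, False, False, 0.7),
    Shot("through_wall", 3.0, False, True, 1.0),
]
print(pick_shot(shots).name)
```

The most dramatic candidate here is rejected outright because it would put the camera inside a wall; only the remaining shots compete on score.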

For the previous game, the dynamic closeup cameras were a combination of procedural scripting and an artist-influenced camera cage around the characters. XCOM 2 doubles down on this with, from what I can tell so far, a greater variety of shots. Diving into closeup mode when a soldier smashes through a window, dashes across a room under fire, or lines up a shot on that nasty alien helps bring you emotionally closer to the action. And, just like in the first game, seeing your soldiers’ visible emotional reactions helps humanize the game and connect you to their stories.

And then you get ambushed by aliens and half the squad dies, because XCOM is, at its heart, a horror game where you care about the characters getting stalked by the unrelenting monsters.