A Mighty Host

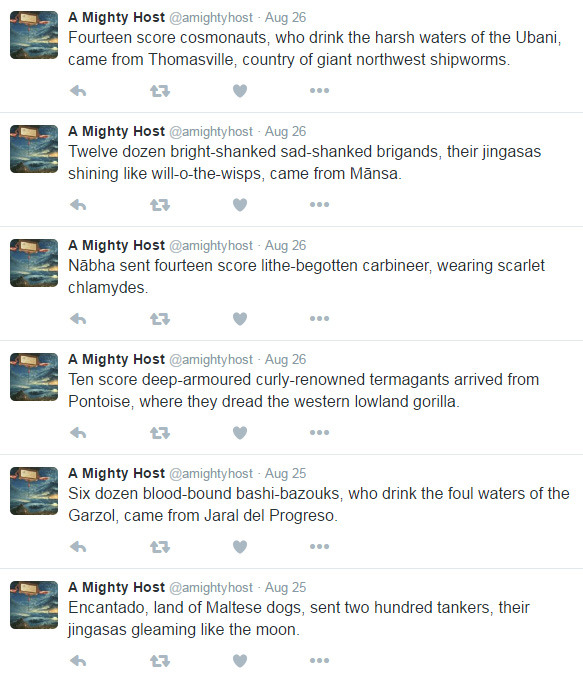

This bot, by Mike Lynch, describes new arrivals to the Trojan War. Inspired by Umberto Eco’s musings on the Catalogue of Ships in the Iliad, the Haskell-based generator draws on data sources like WordNet, NLTK, and Wikipedia to invent new allies for the fleet:

Sporting raw sienna hijabs, two hundred iron-shouldered flat-greaved diggers arrived from Sokółka, home of white wolves.

Badr Ḩunayn, realm of diving petrels, sent four score claw-boned thin-doomed honghuzi.

From Burgos came fifteen dozen tight-beating live-jawed shield-maidens, carrying gladii.

From Nîmes, where they dread the large poodle, came eleven score broad-eating villains.

Nāmagiripettai, country of Ixodes spinipalpiss, sent five score moon-booted knights.

Twelve hundred pale-booted glad-chested corybantes, who drink the lucid waters of the Lyarujegeli, came from Aonla.

Eighteen score star-hung fast-handed paladins came from Cary, where they fight the Chihuahuan spotted whiptail.

There’s some striking imagery in there.
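For a rough sense of how lines like these might be assembled, here is a minimal sketch of template-based generation. It is not Mike Lynch's code: the real bot is written in Haskell and pulls its vocabulary from WordNet, NLTK, and Wikipedia, whereas this version is in Python with tiny hand-written word lists. Every name in it (`TEMPLATES`, `epithet`, `host`) is hypothetical, made up for illustration.

```python
import random

# Hypothetical stand-ins for vocabulary the real bot draws from
# WordNet, NLTK, and Wikipedia.
NUMBERS = ["four score", "eleven score", "two hundred"]
ADJECTIVES = ["iron", "pale", "moon", "broad"]
FEATURES = ["shouldered", "booted", "greaved", "handed"]
WARRIORS = ["knights", "paladins", "shield-maidens"]
PLACES = ["Burgos", "Nîmes", "Cary"]

# Assumed sentence shapes, loosely modeled on the sample output above.
TEMPLATES = [
    "From {place} came {number} {epithet} {warriors}.",
    "{place} sent {number} {epithet} {warriors}.",
    "{number} {epithet} {warriors} came from {place}.",
]


def epithet(rng):
    """Build a Homeric-style compound epithet such as 'iron-shouldered'."""
    return f"{rng.choice(ADJECTIVES)}-{rng.choice(FEATURES)}"


def host(rng):
    """Fill a randomly chosen template and capitalize the first letter."""
    sentence = rng.choice(TEMPLATES).format(
        place=rng.choice(PLACES),
        number=rng.choice(NUMBERS),
        epithet=epithet(rng),
        warriors=rng.choice(WARRIORS),
    )
    return sentence[0].upper() + sentence[1:]


if __name__ == "__main__":
    print(host(random.Random()))
```

Passing in a seeded `random.Random` makes the output repeatable, which is handy for testing. Most of the charm of the real bot presumably comes from the size and oddity of its word lists rather than from the templates themselves.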

I’m reminded of Borges’ use of lists, such as the Celestial Emporium of Benevolent Knowledge. And of C.S. Lewis’s work on medieval literature’s use of lists, encyclopedias, and bestiaries, a habit influenced, I suspect, by Virgil’s Aeneid, which was itself influenced by the Iliad, and so we come full circle.

The pan-temporal character of the hosts is also a suitably medieval conception of the Trojan War: medieval literature and art tended to conflate all time periods, depicting them in contemporary dress and behavior.

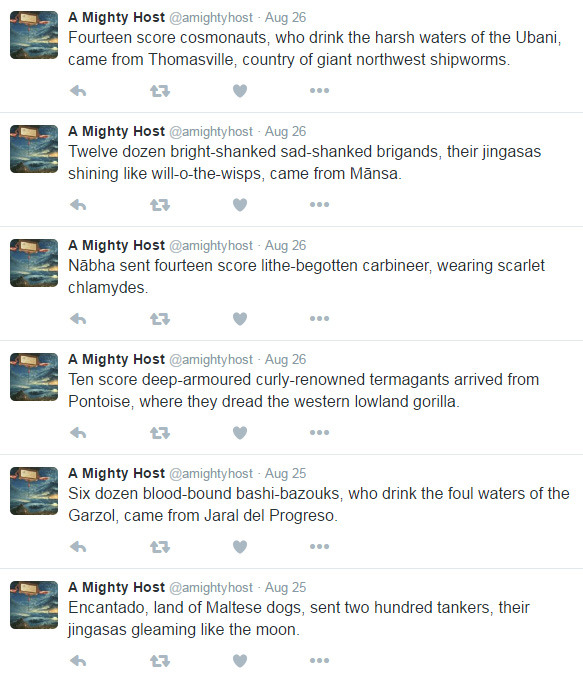

https://twitter.com/amightyhost

http://bots.mikelynch.org/amightyhost/