Dungeon Crawl Stone Soup v0.19 Tournament

It’s a really busy week for procedural generation around here. ProcJam is in full swing, NaNoGenMo is underway, and Dungeon Crawl Stone Soup just started their latest tournament. You can join in on any of the public Dungeon Crawl webservers.

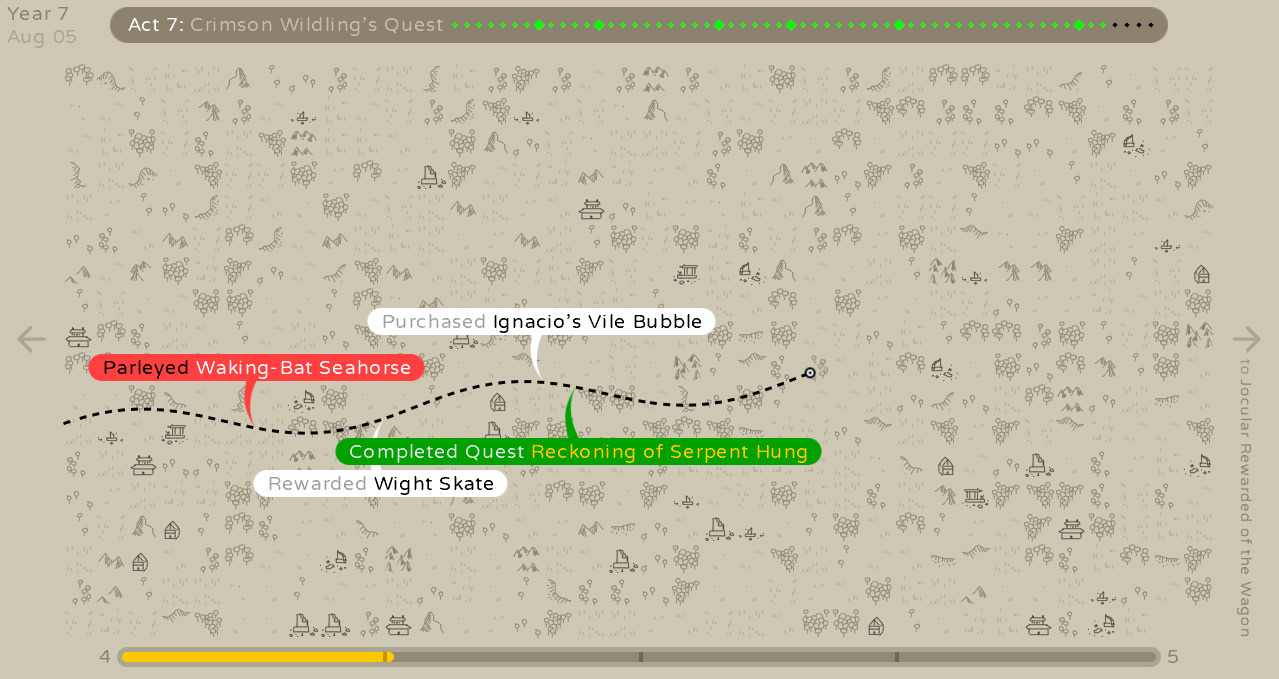

I’ve praised the level generation in Dungeon Crawl before: the sheer amount of meaningful variation in its maps is worth noting.

But that variation wouldn’t be meaningful without interactions to give it meaning, so it’s the rest of the systems that I want to talk about right now. Unlike some other roguelikes that try to continually surprise you with their depth of interaction, Dungeon Crawl Stone Soup keeps things focused. That focus lets the developers put their effort into the things that have the most impact, like variation between characters.

Playing Dungeon Crawl as a Minotaur Fighter surrounded by a continually active elemental storm is very different from playing as a Summoner surrounded by Ice Beasts, and playing as an Octopode assassin is different again. Each interacts with the procedurally generated dungeon in its own way.

The Minotaur makes a lot of noise and draws enemies towards him, so he tends to seek out narrow corridors where he can limit how many enemies he fights at once…though wielding an axe that can hit multiple foes at once means the ideal combat position is in a doorway, with a corridor behind to retreat into. The Hill-Orc Summoner, on the other hand, is surrounded by a crowd of allies, so she prefers an open room where her side’s superior numbers can overwhelm individual enemies. And the Octopode is good at sneaking, so creeping up on enemies unnoticed creates tactical puzzles as you try to close the distance.

This is a good principle for other kinds of interactive generative designs: the rest of the game should reinforce the generative parts and react to them.

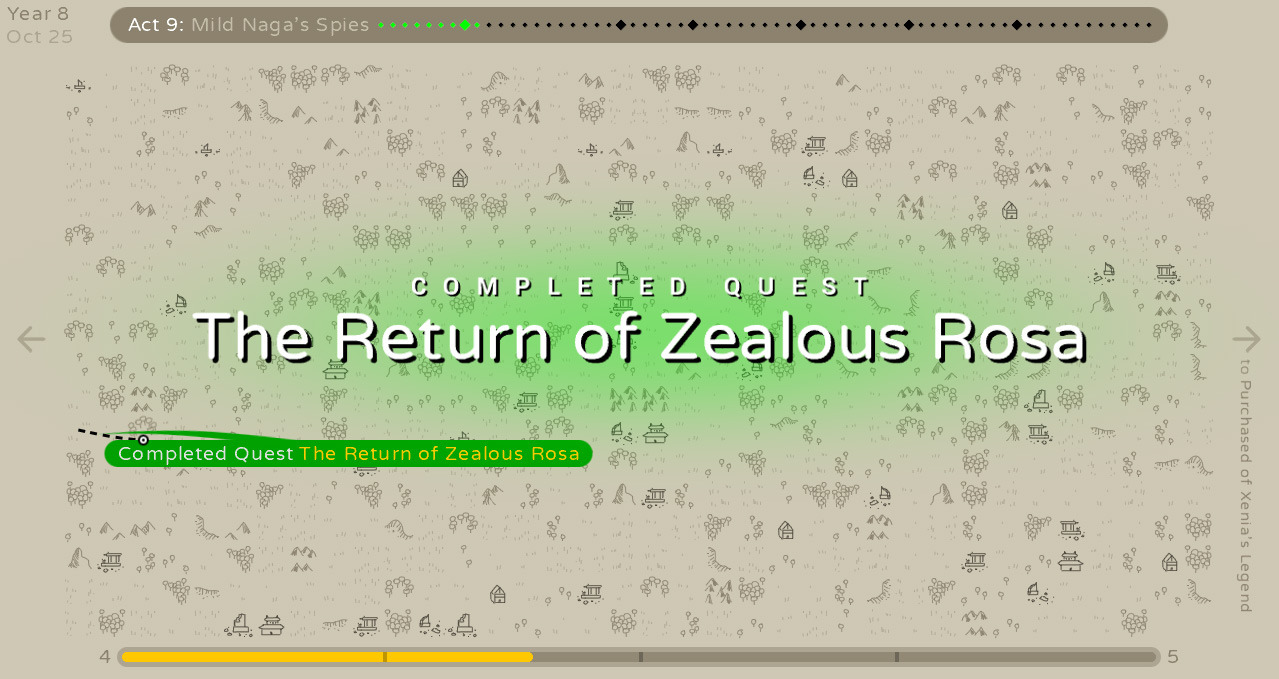

In addition to the character differences, Crawl uses the dungeon branches to introduce new challenges. These side branches (which have entrances at predictable but not fixed locations) use different parameters for their generators, creating more extreme variations. Mixing in wildly different generator patterns is a highly effective way to push away from Kate Compton’s bowl of oatmeal problem (many outputs that are each technically unique but feel identical): at the very least, you can have a bowl of oatmeal and a bowl of corn flakes.
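As a rough illustration of the branch idea, here is a minimal sketch in which one generator is driven by a per-branch parameter table. It is not Crawl’s actual level code: the generator is a common cellular-automaton cave technique (random fill followed by majority-rule smoothing), and `GenParams`, `BRANCH_PARAMS`, the branch names, and every number in the table are hypothetical.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class GenParams:
    """Hypothetical knobs for a simple cellular-automaton cave generator."""
    wall_chance: float      # probability that a cell starts as wall
    smoothing_passes: int   # number of majority-rule smoothing rounds

# Hypothetical branch table: one generator, different parameters per branch.
BRANCH_PARAMS = {
    "main": GenParams(wall_chance=0.45, smoothing_passes=4),
    "caves": GenParams(wall_chance=0.55, smoothing_passes=6),  # dense, twisty
    "swamp": GenParams(wall_chance=0.25, smoothing_passes=1),  # mostly open
}

def _smooth(grid: list[list[bool]]) -> list[list[bool]]:
    """One majority-rule pass: a cell becomes wall if at least 5 of the
    9 cells in its 3x3 neighbourhood (itself included) are walls.
    Out-of-bounds cells count as walls, which keeps the map edges closed."""
    h, w = len(grid), len(grid[0])
    new = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            walls = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if not (0 <= ny < h and 0 <= nx < w) or grid[ny][nx]:
                        walls += 1
            new[y][x] = walls >= 5
    return new

def generate_level(branch: str, width: int, height: int, seed: int) -> list[list[bool]]:
    """Return a height x width grid (True = wall) built with the branch's parameters."""
    params = BRANCH_PARAMS[branch]
    rng = random.Random(seed)
    grid = [[rng.random() < params.wall_chance for _ in range(width)]
            for _ in range(height)]
    for _ in range(params.smoothing_passes):
        grid = _smooth(grid)
    return grid

def wall_fraction(grid: list[list[bool]]) -> float:
    """Share of cells that are walls."""
    return sum(map(sum, grid)) / (len(grid) * len(grid[0]))

# Same seed, same generator, different branch parameters.
for branch in BRANCH_PARAMS:
    print(branch, round(wall_fraction(generate_level(branch, 60, 40, seed=7)), 2))
```

The generator code is shared; only the table entry changes, so adding a new branch with a distinct feel is a one-line change rather than a new algorithm.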

The third feature I’d highlight for those of you looking for ideas for your own generators is that loot generation in Dungeon Crawl is very streaky. One game might have dozens of scrolls on the first few levels and few potions; another might see lots of weapons and few scrolls. Over the course of a whole game these tend to even out, but giving the player access to only a subset of the tools on each run makes each run that much more distinct.
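The post doesn’t describe how Crawl achieves this, so the following is just one way to get a similar streaky-but-fair effect, not Crawl’s method. Each run draws its own item-class weights from a symmetric Dirichlet distribution (built from normalized gamma draws); a small concentration makes each run lopsided, while the expected weight of every class stays equal across runs. `ITEM_CLASSES`, `run_weights`, `generate_loot`, and the default concentration of 0.5 are all hypothetical.

```python
import random
from collections import Counter

# Hypothetical item categories.
ITEM_CLASSES = ["scroll", "potion", "weapon", "armour", "wand"]

def run_weights(rng: random.Random, concentration: float = 0.5) -> list[float]:
    """Draw per-run class weights from a symmetric Dirichlet distribution.

    Normalizing independent Gamma(concentration, 1) draws gives a Dirichlet
    sample. Concentration below 1 makes the weights lopsided (a few classes
    dominate a run); every class still has expected weight 1/len(ITEM_CLASSES).
    """
    draws = [rng.gammavariate(concentration, 1.0) for _ in ITEM_CLASSES]
    total = sum(draws)
    return [d / total for d in draws]

def generate_loot(seed: int, n_items: int, concentration: float = 0.5) -> Counter:
    """Generate one run's loot: pick the run's weights once, then sample items."""
    rng = random.Random(seed)
    weights = run_weights(rng, concentration)
    return Counter(rng.choices(ITEM_CLASSES, weights=weights, k=n_items))

# Two runs with different seeds will usually favour different classes.
print(generate_loot(seed=1, n_items=40))
print(generate_loot(seed=2, n_items=40))
```

Raising `concentration` toward large values flattens every run toward the same even mix, so a single parameter tunes how streaky the loot feels.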

Your generator doesn’t need to use its full range every time. In fact, it might be better if different runs have settings at different extremes.
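One simple way to push runs toward the extremes, sketched under assumptions rather than taken from Crawl, is to sample each per-run setting from a U-shaped Beta(α, α) distribution with α < 1 instead of a uniform one. With α = 0.5 most samples land near either end of a range, so a run tends to commit to one extreme. The setting names and ranges in `sample_run_settings` are hypothetical.

```python
import random

def sample_run_settings(seed: int, alpha: float = 0.5) -> dict:
    """Pick per-run generator settings from a Beta(alpha, alpha) distribution.

    alpha < 1 gives a U-shaped distribution (most samples near 0 or 1);
    alpha == 1 is uniform. Setting names and ranges are hypothetical.
    """
    rng = random.Random(seed)

    def lerp(lo: float, hi: float) -> float:
        # Map a Beta sample in [0, 1] onto the range [lo, hi].
        t = rng.betavariate(alpha, alpha)
        return lo + t * (hi - lo)

    return {
        "room_density": lerp(0.1, 0.9),
        "corridor_width": round(lerp(1, 4)),
        "water_coverage": lerp(0.0, 0.5),
    }

for seed in range(3):
    print(sample_run_settings(seed))
```

Compared with a uniform draw, this keeps the same range of possible settings but spends less of the time in the unremarkable middle.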