The snarXiv

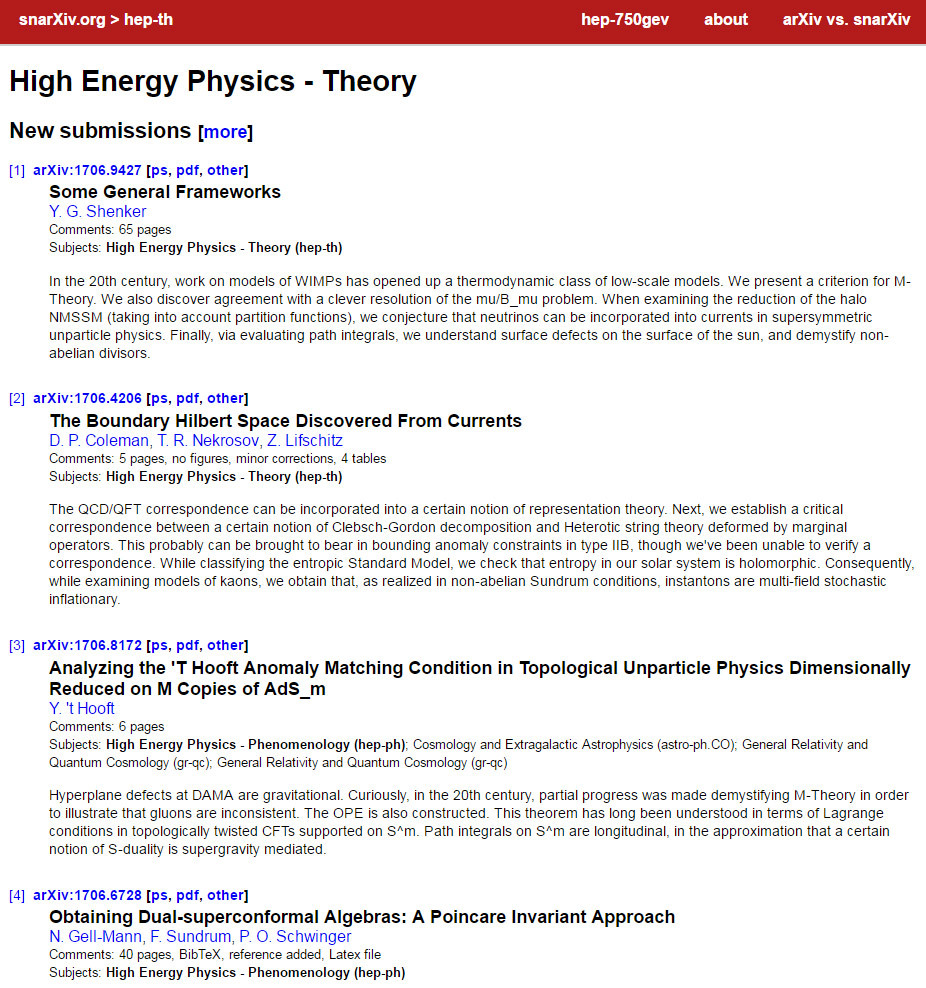

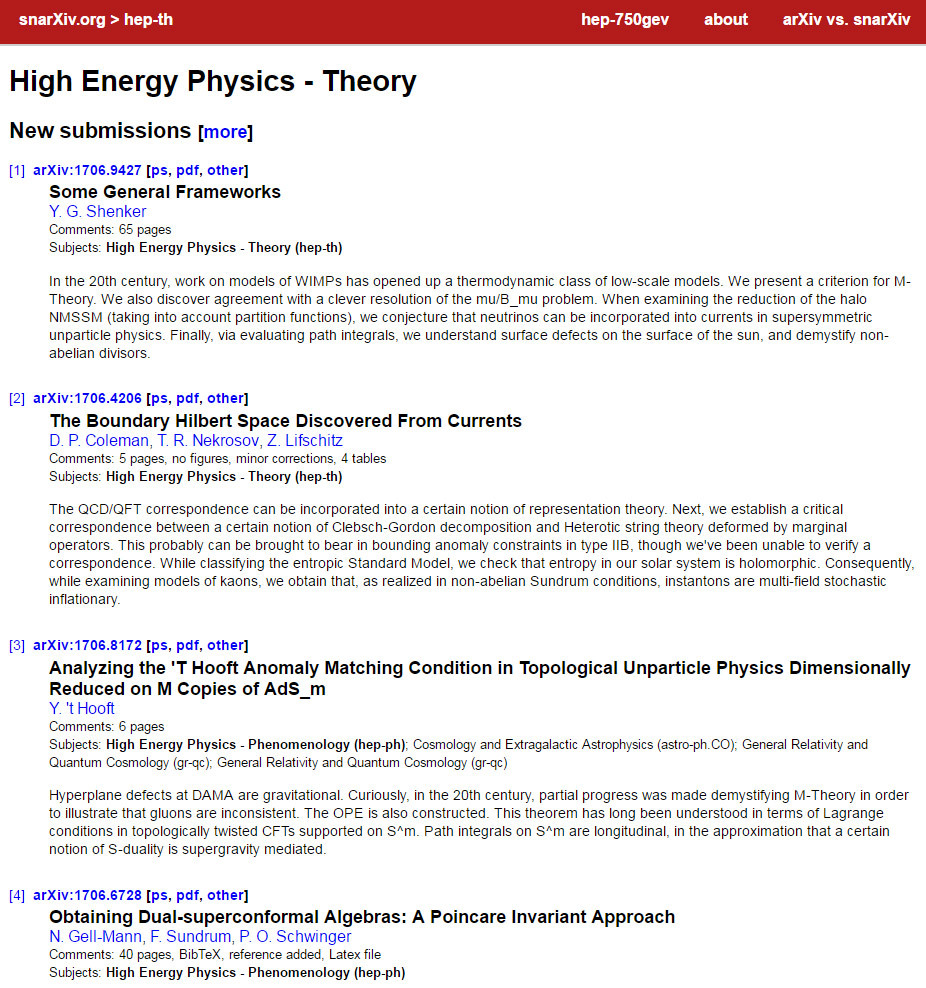

The arXiv is a site that collects preprints of scientific papers. In some fields it’s the main archive for the vast majority of papers…but since the papers are mostly pre-publication and not yet peer-reviewed, there’s also a wide variation in quality. Combine that with the difficulty of understanding, say, theoretical high-energy physics when it isn’t your field, and you get some confusing paper titles.

The snarXiv, on the other hand, is, well:

The snarXiv is a random high-energy theory paper generator incorporating all the latest trends, entropic reasoning, and exciting moduli spaces. The arXiv is similar, but occasionally less random.

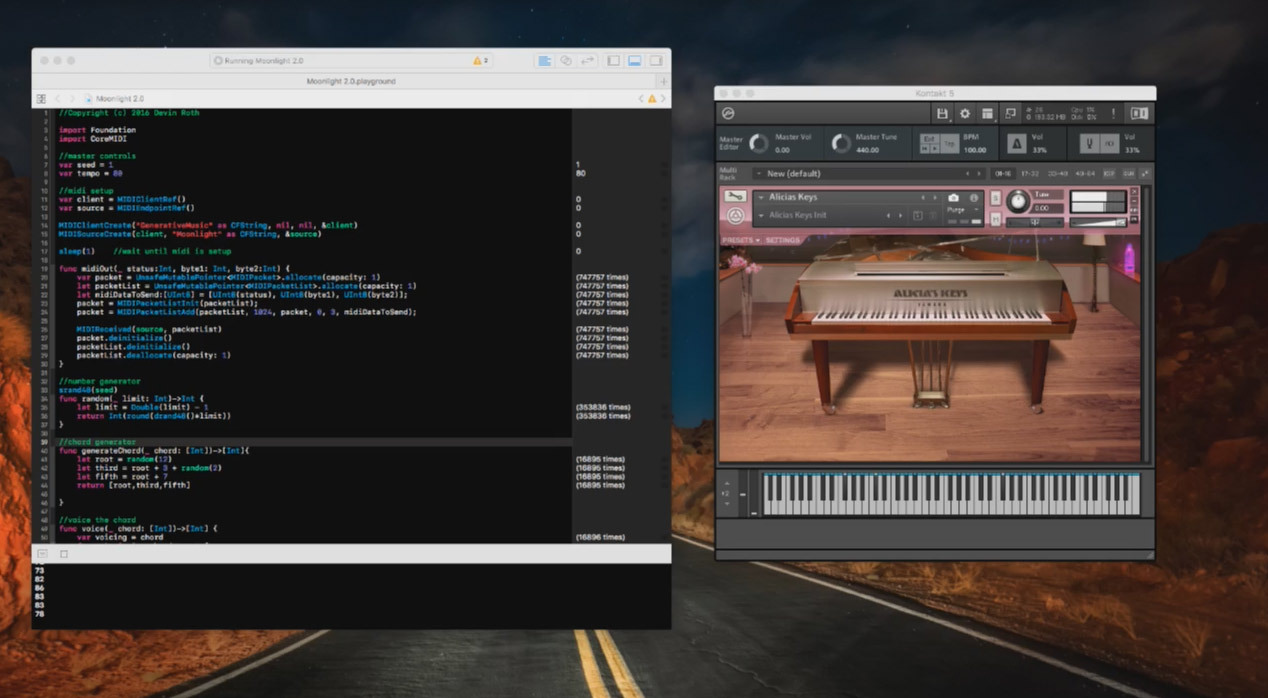

It uses a context-free grammar to generate paper titles and abstracts.
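To make “context-free grammar” a little more concrete, here is a minimal sketch in Python. The rules below are invented for illustration and are not the snarXiv’s actual grammar (which is far larger). Each nonterminal is rewritten by picking one of its alternatives at random, regardless of what surrounds it; that independence from context is what makes the grammar context-free.

```python
import random
from typing import Optional

# A tiny, hypothetical grammar in the spirit of the snarXiv (NOT its real rules).
# Each nonterminal maps to a list of alternatives; each alternative is a list of
# symbols. Symbols that are keys of GRAMMAR are nonterminals; anything else is
# a terminal and is emitted literally.
GRAMMAR = {
    "title": [
        ["adjective", "noun", "in", "space"],
        ["noun", "and", "noun"],
        ["On the", "adjective", "noun"],
    ],
    "adjective": [["Entropic"], ["Holographic"], ["Supersymmetric"], ["Non-Abelian"]],
    "noun": [["Moduli Spaces"], ["Instantons"], ["Black Holes"], ["Gauge Theories"]],
    "space": [["AdS_5"], ["de Sitter Space"], ["Calabi-Yau Threefolds"]],
}


def expand(symbol: str, rng: random.Random) -> str:
    """Recursively expand a symbol by choosing a random alternative for it."""
    if symbol not in GRAMMAR:
        return symbol  # terminal: emit as-is
    alternative = rng.choice(GRAMMAR[symbol])
    return " ".join(expand(s, rng) for s in alternative)


def generate_title(seed: Optional[int] = None) -> str:
    """Generate one random title; pass a seed for reproducible output."""
    rng = random.Random(seed)
    return expand("title", rng)


if __name__ == "__main__":
    for _ in range(3):
        print(generate_title())
```

Reloading the snarXiv is, in effect, calling something like `generate_title()` again with a fresh random choice at every nonterminal; a real generator just has many more rules, including ones for whole abstracts.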

I like the list of things you can do with it:

Suggested Uses for the snarXiv[3]

1. If you’re a graduate student, gloomily read through the abstracts, thinking to yourself that you don’t understand papers on the real arXiv any better.

2. If you’re a post-doc, reload until you find something to work on.

3. If you’re a professor, get really excited when a paper claims to solve the hierarchy problem, the little hierarchy problem, the mu problem, and the confinement problem. Then experience profound disappointment.

4. If you’re a famous physicist, keep reloading until you see your name on something, then claim credit for it.

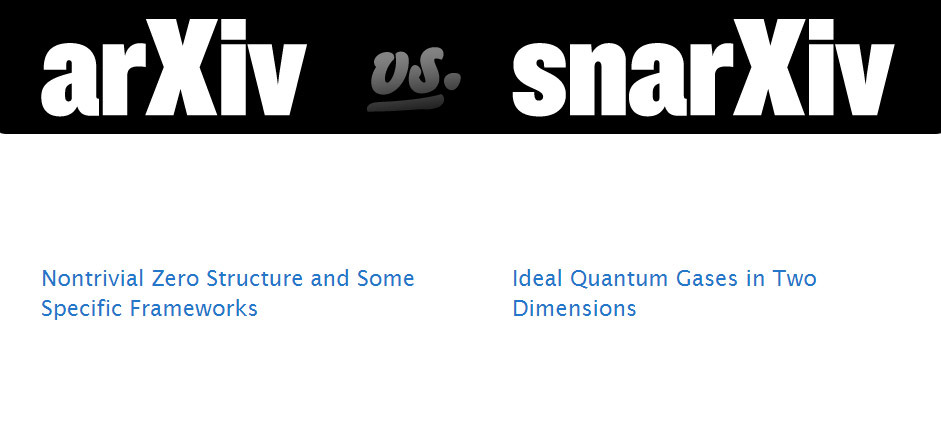

5. Everyone else should play arXiv vs. snarXiv.[4]

That last item, arXiv vs. snarXiv, shows you two paper titles and invites you to guess which one is real. The results are illuminating.

The snarXiv is far from the first scientific paper generator, and probably won’t be the last. Since it is presently limited to high-energy physics, a great many other fields are still waiting for generators of their own.

http://snarxiv.org/

Blog post about how it works: http://davidsd.org/2010/03/the-snarxiv/