AI in theHunter

This article by Karin Skoog about the animal AI in theHunter has been going around the procedural generation community, and for good reason. These kinds of design considerations are important for all kinds of emergent gameplay, and it’s very useful to see them discussed in the context of a released game that uses the AI as an essential part of the experience.

It’s also a good example of something I’ve been talking about a lot lately: the systems behind the generator have meaning and need structure. The AI systems covered in the article are pretty basic behavior trees, but they’re used as part of a deliberate design. Order gives meaning to the emergence. The difference between a forgettable generator and a memorable system is often just in the way it’s used and the ideas embedded in its structure.

As Kate Compton has said, “It’s design” – we’ve got all these fancy tools, but often the real problem is just making things with them.

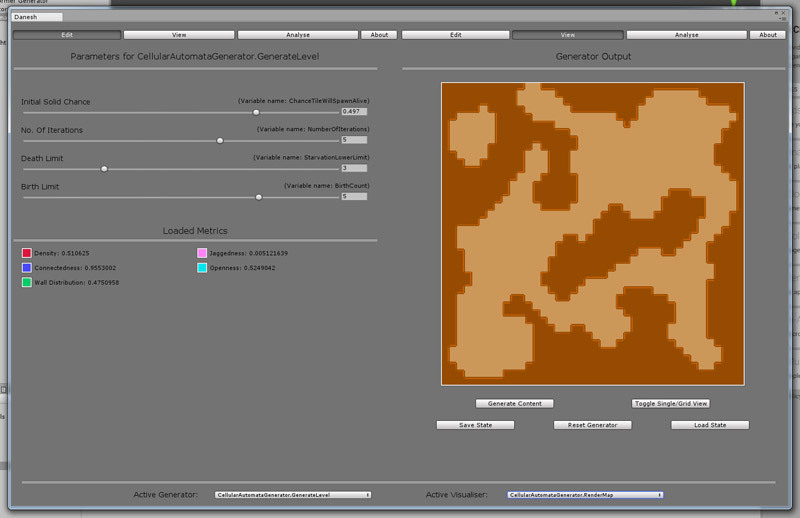

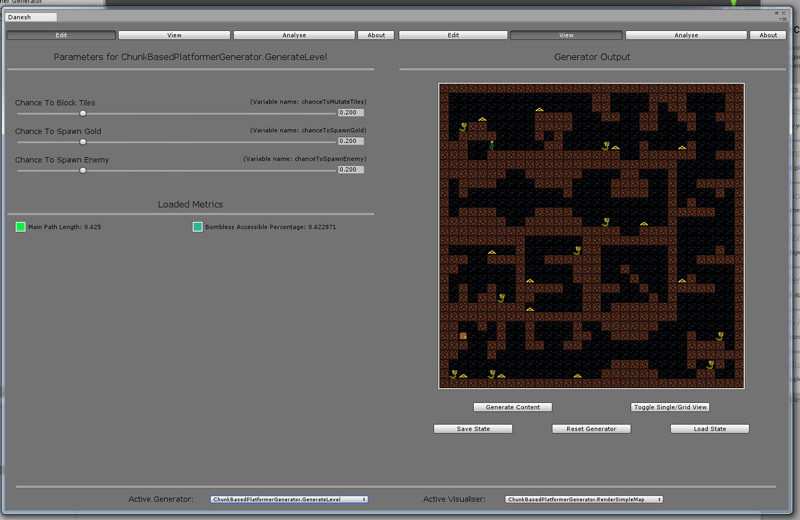

Take terrain generation, for example. Throwing some Perlin noise or a fractal together is fairly easy; I can teach a novice to do it in an afternoon. Having that terrain mean something to the people who interact with it is another step altogether. How you do that depends intimately on what you want to say.
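To show how little is involved in the easy part, here is a minimal sketch of a fractal heightmap in plain Python. It uses value noise (smoothed random lattice values) rather than true Perlin gradient noise, because it is shorter to write down. Every name and parameter here (`heightmap`, `scale`, `octaves`, `gain` and so on) is my own choice for illustration, not taken from any particular game or library.

```python
import math
import random


def make_lattice(size, seed):
    """Grid of random values in [0, 1); the raw material for value noise."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(size)] for _ in range(size)]


def smoothstep(t):
    """Ease curve so the interpolation has no visible creases at grid lines."""
    return t * t * (3.0 - 2.0 * t)


def value_noise(lattice, x, y):
    """Smoothly interpolate between the four lattice values around (x, y)."""
    size = len(lattice)
    x0, y0 = math.floor(x), math.floor(y)
    tx, ty = smoothstep(x - x0), smoothstep(y - y0)
    # Wrap indices so the noise tiles instead of running off the lattice.
    x0, y0 = x0 % size, y0 % size
    x1, y1 = (x0 + 1) % size, (y0 + 1) % size
    top = lattice[y0][x0] + tx * (lattice[y0][x1] - lattice[y0][x0])
    bottom = lattice[y1][x0] + tx * (lattice[y1][x1] - lattice[y1][x0])
    return top + ty * (bottom - top)


def fractal_height(lattice, x, y, octaves=4, lacunarity=2.0, gain=0.5):
    """Sum several octaves of noise: each one is finer and fainter."""
    total, norm = 0.0, 0.0
    amplitude, frequency = 1.0, 1.0
    for _ in range(octaves):
        total += amplitude * value_noise(lattice, x * frequency, y * frequency)
        norm += amplitude
        amplitude *= gain
        frequency *= lacunarity
    return total / norm  # normalised back into the 0..1 range


def heightmap(width, height, scale=8.0, seed=0):
    """Rows of heights between 0 and 1; larger scale means broader hills."""
    lattice = make_lattice(16, seed)
    return [
        [fractal_height(lattice, x / scale, y / scale) for x in range(width)]
        for y in range(height)
    ]
```

Calling `heightmap(64, 64, seed=42)` gives a grid of numbers between 0 and 1, and that is all it gives. Nothing in the output says what a hill is for or why a player should care about a valley, which is the part that takes design.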

SimCity 2000 uses terrain generation to present its model of the importance of the physical space a city exists in: hills and rivers are important, demographics less so. Elite: Dangerous ties its terrain generation to a model of solar system formation. No Man’s Sky’s terrain expresses an aesthetic of pulp sci-fi novel covers. Minecraft uses biomes and blocks for its two-way conversation with the player.

Even with that diversity, most of those are still pretty straightforward. What about using generated space to express gameplay relationships? Or making terrain generation part of the gameplay? Or making a whole game about exploring the possibility space of the terrain generator?

One of the things that I’m very interested in is how we can use these tools to express ideas in ways that wouldn’t be possible otherwise.

http://www.karineskoog.com/thehunter-call-of-the-wild-designing-believable-simulated-animal-ai/