Training a Neural Network

This morning, I checked in on the neural network I’ve been training. It’s based on the NeuralEnhance code, but I’m deliberately training it with paintings instead of photos so it’ll recognize brush strokes (and maybe even introduce them if I run it on a photo).

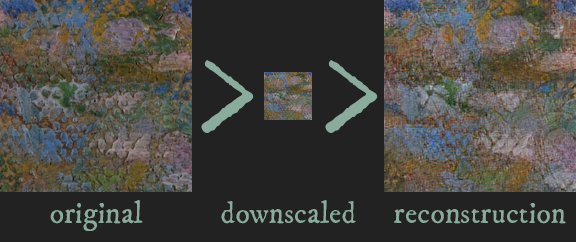

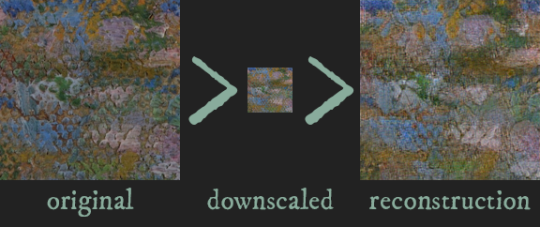

When I started training it, the early results were like this:

There’s a strong dithered checkerboard look to that reconstruction. Here, I’ll make it larger so you can see the details:

Not the best result. That checkerboarding is a common artifact when the sampling cells overlap. I tweaked a couple of settings to deal with it better, but the effective remedy proved to be just way more training.
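To see why overlap can produce that pattern, here is a minimal sketch. It assumes the upsampling behaves like a transposed convolution, which is a common source of checkerboarding but not something confirmed about the NeuralEnhance code. The name `overlap_counts` and its parameters are made up for this illustration. It counts how many kernel taps land on each output pixel along one dimension.

```python
def overlap_counts(n_inputs, kernel_size, stride):
    """Count kernel taps per output position for a 1D transposed convolution.

    Hypothetical helper: each input position i writes to outputs
    i * stride ... i * stride + kernel_size - 1.
    """
    length = (n_inputs - 1) * stride + kernel_size
    counts = [0] * length
    for i in range(n_inputs):
        start = i * stride
        for k in range(kernel_size):
            counts[start + k] += 1
    return counts


# Kernel size 3 is not a multiple of stride 2: coverage alternates.
uneven = overlap_counts(n_inputs=6, kernel_size=3, stride=2)
print(uneven)  # [1, 1, 2, 1, 2, 1, 2, 1, 2, 1, 2, 1, 1]

# Kernel size 4 is a multiple of stride 2: interior coverage is uniform.
even = overlap_counts(n_inputs=6, kernel_size=4, stride=2)
print(even)  # [1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 1, 1]
```

In the first case, neighbouring pixels alternate between one and two contributions, and in two dimensions that unevenness can show up as a checkerboard. A network can learn weights that compensate for it, which would be consistent with more training eventually smoothing it out.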

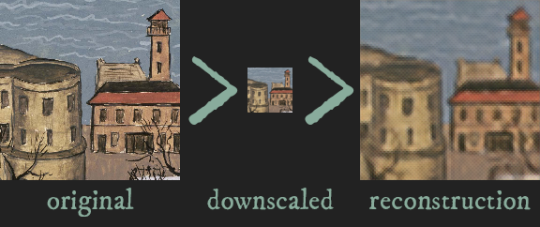

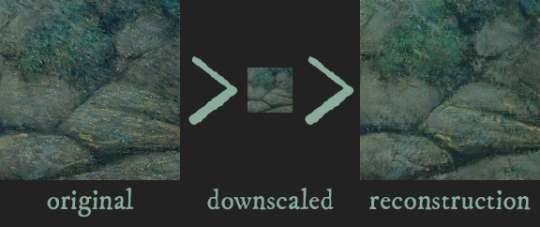

A little later on it was getting the hang of things, but could sometimes still fail pretty spectacularly on tricky inputs:

As you can see, it doesn’t really look much like the input. I figured that kind of input was probably always going to give it trouble.

Since I was running it on my spare computer, I could forget about it for a couple of weeks. That computer has a slower GPU, but more time to render. (And it makes a really good space heater while it’s working.)

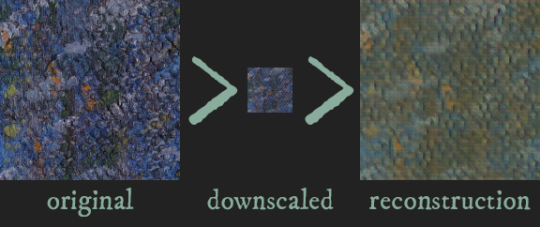

This morning, I discovered that, a few hundred generations later, it’d managed to learn how to deal with complex paint splotches like that:

Which is a pretty astonishing reconstruction.

Of course, this is still all just the training verification so far: I haven’t had time to try it out on a larger project. But the results are looking pretty promising for what I want it to be able to do.

It’s gotten pretty good at reconstructing details at different scales (because I’ve been feeding in training data at different scales). A different approach might be to give it input information about what scale the source material is at: is a given chunk a painting of an arm, or a closeup of a couple of the brushstrokes on that arm? Seems to have done pretty well without that, though.
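A minimal sketch of what feeding in training data at different scales could look like. This is not the NeuralEnhance data loader: the function names, the box-filter downsampling, and the list of scales are all assumptions for illustration, and images are plain nested lists of grayscale values to keep it self-contained.

```python
import random


def box_downsample(image, factor):
    """Shrink a 2D list of pixel values by averaging factor x factor blocks."""
    height = len(image) // factor
    width = len(image[0]) // factor
    return [
        [
            sum(
                image[y * factor + dy][x * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ) / (factor * factor)
            for x in range(width)
        ]
        for y in range(height)
    ]


def random_patch(image, patch_size, rng):
    """Cut a random patch_size x patch_size square out of the image."""
    top = rng.randrange(len(image) - patch_size + 1)
    left = rng.randrange(len(image[0]) - patch_size + 1)
    return [row[left:left + patch_size] for row in image[top:top + patch_size]]


def training_pair(image, patch_size, zoom, scales, rng):
    """Return a (low-res input, high-res target) pair at a random scale.

    The painting is first shrunk by a randomly chosen scale, so the same
    patch size sometimes covers a whole arm and sometimes a few strokes.
    The scaled image must still be at least patch_size pixels on each side.
    """
    scale = rng.choice(scales)
    scaled = box_downsample(image, scale)
    target = random_patch(scaled, patch_size, rng)
    source = box_downsample(target, zoom)
    return source, target


# Hypothetical 64x64 "painting": a simple diagonal gradient.
image = [[(x + y) / 126 for x in range(64)] for y in range(64)]
source, target = training_pair(
    image, patch_size=16, zoom=2, scales=[1, 2, 4], rng=random.Random(0)
)
print(len(source), len(target))  # 8 16
```

The network never gets told which scale was picked, which matches the setup described above: it has to infer from the patch itself whether it is looking at a limb or a brushstroke.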

I’m intentionally trying to train a neural net with a high degree of bias, because I want the images I resize with it to reflect that bias. Many algorithms are biased, particularly the very data-reliant neural networks. It’s like the saying about who the sucker in the poker game is: If you can’t spot the bias in the dataset, your unacknowledged biases might be reflected in it. It’s better to know how the data is biased so that you can take steps to mitigate that.

It’s also apparent how the algorithm will invent details based on how it was trained: it can’t restore the original image, it can just make its best guess.

Often that guess is really, really good. But we should remember that it is a guess, that these images are not a recording of reality in the way we usually think about photos.
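A toy illustration of why it has to be a guess: shrinking an image throws information away, so different originals can end up as the exact same small image. The sketch below uses a simple averaging downsample (my assumption for the example, not necessarily how the training inputs were produced) on three made-up 2x2 patches.

```python
def box_downsample(image, factor):
    """Shrink a 2D list of pixel values by averaging factor x factor blocks."""
    height = len(image) // factor
    width = len(image[0]) // factor
    return [
        [
            sum(
                image[y * factor + dy][x * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ) / (factor * factor)
            for x in range(width)
        ]
        for y in range(height)
    ]


# Three different hypothetical "originals" (0 = black, 1 = white).
checker_a = [[0, 1], [1, 0]]
checker_b = [[1, 0], [0, 1]]
flat_grey = [[0.5, 0.5], [0.5, 0.5]]

small = [box_downsample(p, 2) for p in (checker_a, checker_b, flat_grey)]
print(small)  # [[[0.5]], [[0.5]], [[0.5]]]
```

Given only the single grey pixel, nothing can tell which of the three patches it came from. A network trained on paintings will pick whichever answer looks most like the paintings it has seen.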

Of course, as I’ve argued before, no photo deserves to be treated as real in that way. But with the existence of things like FaceApp, the historical record is going to be increasingly cluttered with algorithmically manipulated images, many of them altered without direct human intervention.

Not to mention that many lost paintings survive only as imperfect photos. What would it be like if the only surviving copy of the Mona Lisa were the FaceApp-altered one?