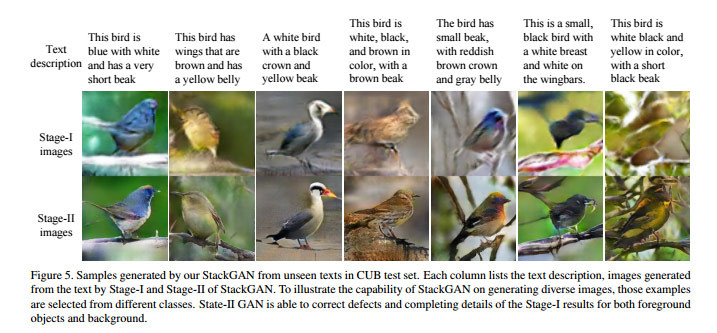

StackGAN: Text to Photo-realistic Image Synthesis

This isn’t the first text-to-image generation research I’ve seen, which is a bit mind-blowing to say. It does produce the best-quality results I’ve seen so far.

This research, by Han Zhang, Tao Xu, Hongsheng Li, Shaoting Zhang, Xiaolei Huang, Xiaogang Wang, and Dimitris Metaxas, uses a stack of neural networks to go from the text input to the generated-photo output.

Since the sentences are represented as vectors, the model can also interpolate between different sentences: