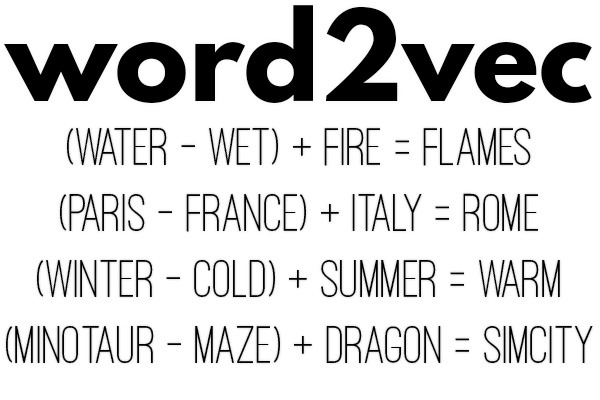

word2vec

NaNoGenMo 2016 is just around the corner, so what better time to write about text generation? In this case, it’s a tool that was invented in 2013: word2vec.

The basic concept is pretty simple: take a bunch of text and learn a vector representation for each word. Words with similar meanings have similar vectors, and more interestingly, doing math with them corresponds with some of the linguistic meanings: Paris - France + Italy = Rome.

The exact results depend on the training data, and it doesn’t always capture the exact association that a human might: with the Wikipedia corpus, (Minotaur - Maze) + Dragon gets results like “SimCity” and “Toei”, though (Minotaur - Labyrinth) + Dragon = “Dungeon”. When you’re using it for generation, it’s often a good idea to get a list of the closest vectors, and then pick the best result based on some other criteria, or weighted random sampling.

The original research paper and the code from the Google project can be found online, but there are many other implementations, such as a Python one in gensim. Here’s a post with more information and tutorials.

There’s also some in-browser implementations you can play around with. This one uses the Japanese and English Wikipedia as corpora, and this one is implemented in Javascript.