A Probabilistic Model for Component-Based Shape Synthesis

This research from 2012 by Evangelos Kalogerakis, Siddhartha Chaudhuri, Daphne Koller, and Vladlen Koltun is something I’d like to see used more.

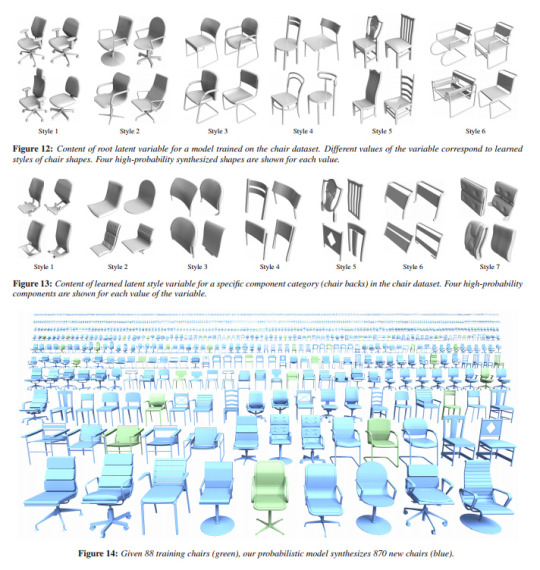

Their project synthesises new shapes based on existing models. It works by analysing the components that make up those models and using unsupervised learning to produce plausible new ones.
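To get a feel for the idea, here is a toy sketch in Python. It is not the paper's model: it swaps in a much cruder rule, where a new shape counts as plausible if every pair of its parts has appeared together in some example. The slot names, part names, and functions (`SLOTS`, `EXAMPLES`, `synthesise`, and so on) are all made up for illustration.

```python
from itertools import combinations, product

# Hypothetical component slots and example "chairs"; none of this is from the paper.
SLOTS = ("back", "seat", "legs")

EXAMPLES = [
    {"back": "slatted", "seat": "wood", "legs": "four_straight"},
    {"back": "slatted", "seat": "wood", "legs": "four_tapered"},
    {"back": "padded", "seat": "cushion", "legs": "swivel_base"},
    {"back": "padded", "seat": "cushion", "legs": "four_straight"},
    {"back": "slatted", "seat": "cushion", "legs": "four_tapered"},
]


def learn_compatible_pairs(examples):
    """Record every pair of parts (from two different slots) seen in one shape."""
    seen = set()
    for shape in examples:
        for a, b in combinations(SLOTS, 2):
            seen.add((a, shape[a], b, shape[b]))
    return seen


def is_plausible(shape, seen):
    """Assumed rule: a shape is plausible if all of its part pairs were observed."""
    return all(
        (a, shape[a], b, shape[b]) in seen for a, b in combinations(SLOTS, 2)
    )


def synthesise(examples):
    """Enumerate part combinations and keep the plausible ones not in the input."""
    seen = learn_compatible_pairs(examples)
    # All parts observed for each slot, sorted so the output order is stable.
    options = [sorted({shape[slot] for shape in examples}) for slot in SLOTS]
    novel = []
    for parts in product(*options):
        shape = dict(zip(SLOTS, parts))
        if shape not in examples and is_plausible(shape, seen):
            novel.append(shape)
    return novel


if __name__ == "__main__":
    for shape in synthesise(EXAMPLES):
        print(shape)
```

With these five examples the sketch finds one new chair: a slatted back, a cushion seat, and four straight legs. A learned probabilistic model can generalise far beyond a lookup of observed pairs like this, but the shape of the problem is the same: learn which parts go together, then recombine them.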

I think that tools like this are going to be relatively commonplace in the future.

They won’t replace artists entirely, of course. If you need a specific chair, you’ll still need to make it by hand. And of course someone needs to create the input data, and to choose how to use the output data (though admittedly that will sometimes be another AI). But you will be able to turn a few dozen chair designs into hundreds. Today, even the biggest games can only afford a limited degree of variation in their assets; mixing in procedural generation to this extent would bring them closer to the variation we see in the real world.

Of course, that brings us to the question of whether you want every chair to be unique.

Game design and level design are already dealing with this: where Thief: The Dark Project makes non-interactive doors an obvious flat texture without a handle, and the original Doom had a finite set of memorizable interactive objects, more photorealistic games are forced to find other ways to differentiate the interactive scenery.

The paper has been cited quite often, and some of the work that cites it looks promising. And, given the recent rapid innovation in image synthesis, I expect that model synthesis has some very promising paths to explore. So be on the lookout for more tools that let you use procedural generation in your pipeline.