neural-doodle and Image Analogies

As an artistic process, making these neural doodles feels very much like it falls somewhere between collage and photomontage. It reminds me strongly of John Heartfield’s work, though he was working in photomontages for explicitly political ends. The way that the final image is both a seamless result but simultaneously obviously constructed (at least to the artist who did the composition) feels similar to me.

For now, the direct doodling is mostly confined to the data from one source image, making complex re-compositions more difficult. I suspect that there are some clever artistic ways to use this limitation, though I’d just as soon see some bigger images or a better way to tile the algorithm.

The results appear quite polished and inventive, until you see them directly next to the original paintings. Then the derivatives become obvious, and unless the new composition has a strong motivation the new paintings suffer from the comparison. More source images might help. Or, possibly, just a better artistic vision for the result you want and a grasp of the technique to make the doodling do what you want.

In any case, I don’t think that painters need to worry that they’re going to be replaced—although I am tempted to try my hand at repainting that van Gogh from the new composition. But that’s another form of collage.

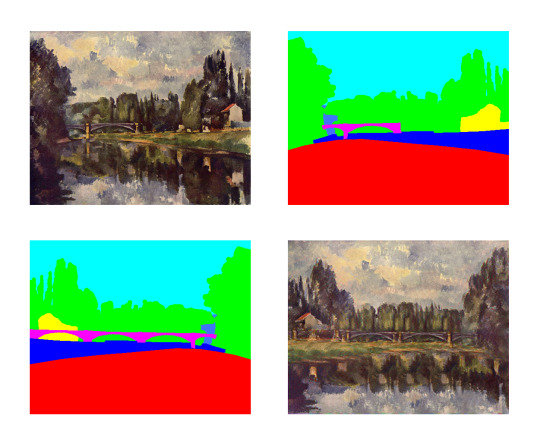

The way the neural-doodle works, to use this example based on one of Cézanne’s paintings, starts with me drawing a semantic map, which you can see in the upper-right corner there. I then drew the target semantic map, in the lower left-hand corner, to tell the algorithm what composition to aim for.

I then feed it into neural-doodle. It tries to match up the style to the new composition, using Semantic Style Transfer. As Alex argues in the paper, generating images from CNNs mostly ignores the semantic information they have collected during classification, resulting in matches between colors but not shapes or objects. Semantic Style Transfer rectifies that. For now, the semantic annotations are manually added by the user, but could conceivably be handled by an algorithm in the future.

The resulting image in the lower right-hand corner takes a bit of processing time and some careful judgement on my part as to how the semantic map should be drawn. I’m still learning the ways that the mapping can be used, and what kind of images it works best on. There’s quite a bit of potential here for artists to play with and experiment on.

I think the most in-demand skill is probably having a good eye for composition, together with a strong idea to focus the result. Without a unifying theme or focus, the generated result can’t help lacking the cohesion of the original. But with more sources of data mixed in and a strong idea to unify it, there’s potential for an artist of the future to find.

One thing I want to see is to have more artists feed their personal work into the algorithm and control it from both ends. What happens when you’ve created both the image and the output? What kind of source images should you draw to get the results you want to see?

Meanwhile, as we wait for the centaurs to overrun civilization, it seems like there’s room to explore the implications of the artistic movements this enables.