Generating scenes of Friends with a Neural Network by Andy Pandy

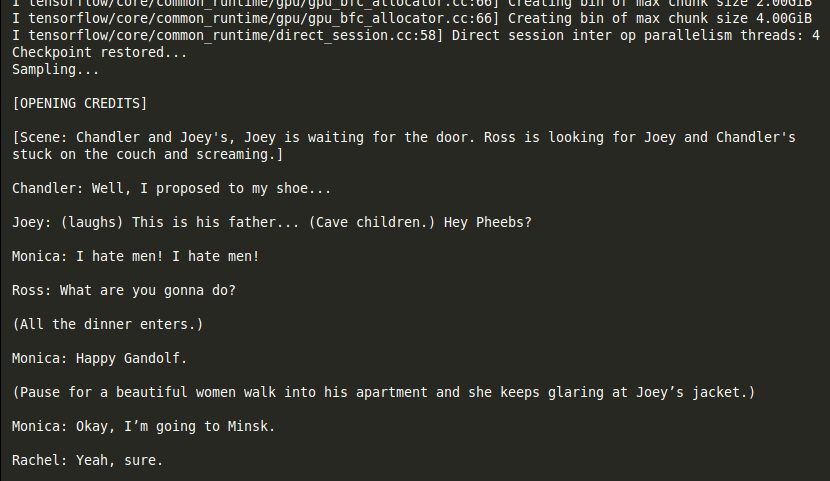

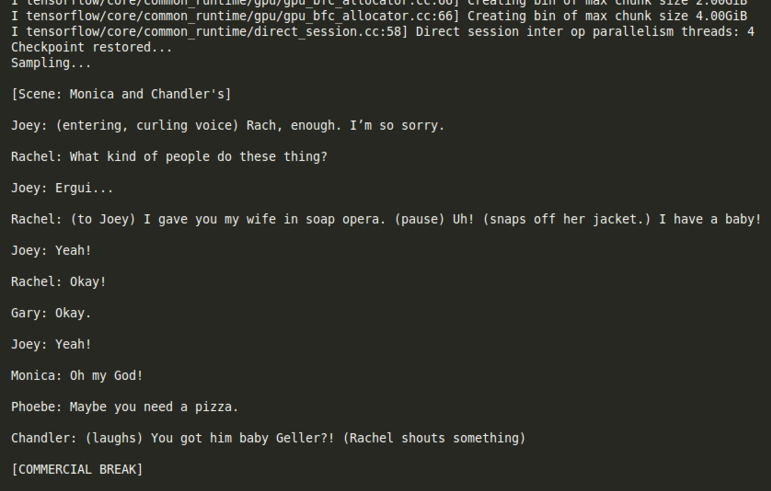

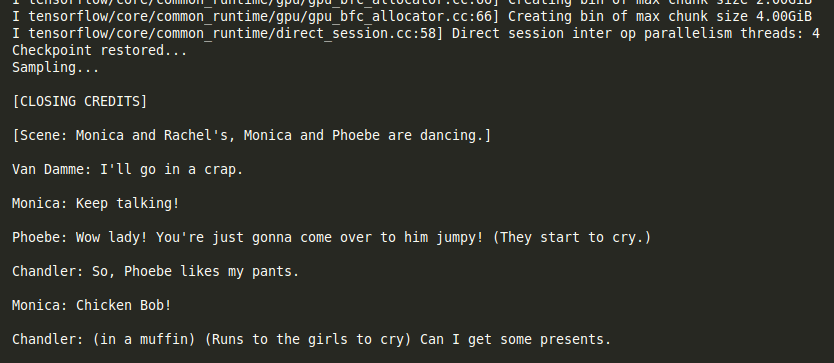

Andy has fed the scripts for every scene of Friends into a recurrent neural network (RNN), and it learned to generate new scenes. It has scripted some interesting events, like Monica wishing the others ‘Happy Gandolf’ just after the stage direction (All the dinner enters.)

Someone should take this further by using Sam Lavigne’s Videogrep to edit existing Friends footage into the scenes created by the RNN. Then I’ll post them on algopop ;)

I’m very fond of the results that recurrent neural networks are producing. They have their limitations, especially since they have to learn what little they know about language from scratch. On the other hand, here is a computer algorithm that can learn enough about language from scratch to write semi-intelligible text! And also Magic: The Gathering cards!

I especially like the suggestion to tie it into other algorithms to take the results to the next level. Combining procedural generation algorithms with each other and with different kinds of input is a key way to increase the new-information ratio. A generator that combines high entropy with a strong signal is the dream.

Roughly, each new piece of information the generator produces should be unexpected but meaningful, which is a tricky line to walk. One way to do it is to take advantage of the pleasure we get from realizing that a plot was predictable in hindsight but surprising in the moment. But twists aren’t the only method: there are other ways to get the same newness-with-meaning.
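One common knob for walking that line with character-level RNNs is the sampling temperature, which rescales the network's output scores before the next character is sampled. The sketch below is not taken from Andy's setup: the `scores` list is a made-up stand-in for a network's output, and `softmax`/`entropy_bits` are hypothetical helper names. It just shows how temperature trades predictability for surprise, measured as Shannon entropy in bits.

```python
import math

def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; temperature must be > 0."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy H = -sum(p * log2 p), skipping zero probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical next-character scores from a trained model (illustrative only).
scores = [2.0, 1.0, 0.5, -1.0]

for t in (0.5, 1.0, 2.0):
    probs = softmax(scores, t)
    print(f"T={t}: top p={max(probs):.2f}, entropy={entropy_bits(probs):.2f} bits")
```

Low temperatures pick the likeliest character almost every time (predictable, often repetitive), while high temperatures flatten the distribution toward uniform (surprising, but closer to noise). With four options the entropy can never exceed 2 bits.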

This particular result, posted on Twitter by @_Pandy, uses a constraint of the generator to its advantage. RNNs work better when the text they’re trained on follows the same general structure, so that they can focus on learning the commonalities. Because an RNN tends towards averages, it doesn’t work as well when the average result falls between two stools. Choosing the scripts for a single television show gives the result context and keeps the training data focused.

The context of a known TV show makes it easier to imagine the nonsense scenes happening. Trained on such focused data, the network quickly learns what the characters on the show are named and how the script is formatted, so it takes much less time to produce an intelligible result.
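Training an RNN is too much for a short example, so the sketch below swaps in a much simpler technique: a character-level Markov chain (not what Andy used). It still shows how a small, uniformly formatted corpus pins down names and layout. The corpus lines are invented placeholders in a "NAME: dialogue" format, not real Friends dialogue, and `train`/`generate` are hypothetical helper names.

```python
import random
from collections import defaultdict

def train(text, order=3):
    """Map every `order`-character context to the characters seen after it."""
    model = defaultdict(list)
    for i in range(len(text) - order):
        model[text[i:i + order]].append(text[i + order])
    return model

def generate(model, seed, length, rng):
    """Extend `seed` one character at a time; len(seed) must equal the model's order."""
    order = len(seed)
    out = seed
    for _ in range(length):
        choices = model.get(out[-order:])  # .get avoids adding empty keys
        if not choices:  # context never seen in training: stop early
            break
        out += rng.choice(choices)
    return out

# Placeholder script lines (invented, not real dialogue).
corpus = (
    "MONICA: Hi guys.\n"
    "ROSS: Hi.\n"
    "CHANDLER: Hey, Monica.\n"
    "MONICA: Coffee, Ross?\n"
    "ROSS: Sure.\n"
)

model = train(corpus, order=3)
print(generate(model, "MON", 80, random.Random(42)))
```

Every four-character window in the output occurs somewhere in the training text, so the generator can only emit speaker tags and names it has already seen. An RNN can generalize beyond exact windows, which is how it invents things like ‘Happy Gandolf’, but a narrow corpus narrows it in a similar way.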