sketch-rnn

Reversing recognition neural networks to generate content has been a big theme in 2015. The detailed blogging by hardmaru about different experiments has been one of the highlights of the trend.

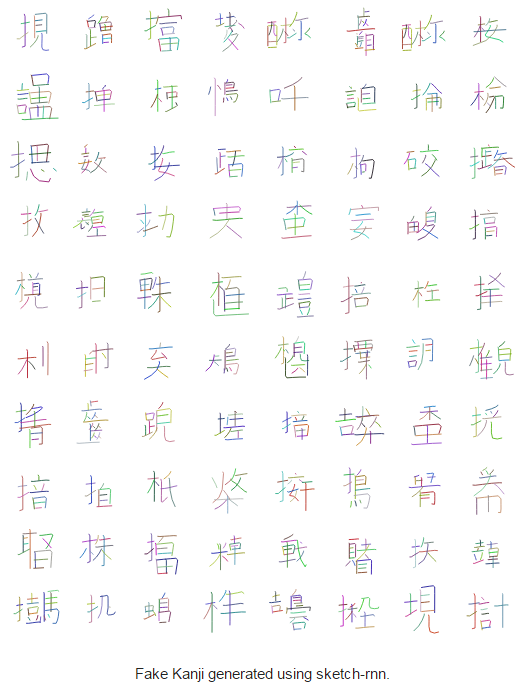

The latest experiment is to use a recurrent neural net to make fake Chinese characters. Unlike hardmaru, I’m not a native speaker, so I can’t evaluate them for effectiveness. Native speakers seem to find them fairly convincing, though.

But there’s a deeper thing going on here: the reason hardmaru was interested in this approach in the first place is that, unlike most other recent generators, which work on pixels, this is an inherently vector-based technique.

Generating something that is more naturally represented with vectors gives two distinct advantages.

First, the machine’s representation of the data more closely corresponds to the thing it is trying to represent. The ordered strokes of the vectors are much more meaningful than any pixel representation of them can be.
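To make the contrast with pixels concrete, here is a minimal sketch of one way ordered strokes can be stored as a sequence. The `(dx, dy, pen_lifted)` step format, the function names, and the plus-sign glyph are all illustrative assumptions on my part, not necessarily the encoding hardmaru’s experiment uses.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Stroke = List[Point]
Step = Tuple[float, float, int]  # (dx, dy, pen_lifted), an assumed format


def strokes_to_steps(strokes: List[Stroke]) -> List[Step]:
    """Flatten ordered strokes into pen movements relative to the last point."""
    steps: List[Step] = []
    prev_x, prev_y = 0.0, 0.0
    for stroke in strokes:
        for i, (x, y) in enumerate(stroke):
            # Flag the last point of each stroke so stroke boundaries survive.
            pen_lifted = 1 if i == len(stroke) - 1 else 0
            steps.append((x - prev_x, y - prev_y, pen_lifted))
            prev_x, prev_y = x, y
    return steps


def steps_to_strokes(steps: List[Step]) -> List[Stroke]:
    """Rebuild absolute stroke coordinates from the step sequence."""
    strokes: List[Stroke] = []
    current: Stroke = []
    x, y = 0.0, 0.0
    for dx, dy, pen_lifted in steps:
        x, y = x + dx, y + dy
        current.append((x, y))
        if pen_lifted:
            strokes.append(current)
            current = []
    if current:  # keep a trailing stroke that was never closed
        strokes.append(current)
    return strokes


# Hypothetical two-stroke glyph: a horizontal bar, then a vertical bar.
plus_glyph = [[(0.0, 1.0), (2.0, 1.0)], [(1.0, 0.0), (1.0, 2.0)]]
steps = strokes_to_steps(plus_glyph)
```

Four short steps carry the stroke order, the stroke direction, and where the pen lifts. A bitmap of the same plus sign would record none of that.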

Second, it makes it easier to stay close to an ordered result: the chaos that is added is more meaningful because it naturally conforms to the rules that create the underlying structure.
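A toy illustration of that point, under loud assumptions: the `(dx, dy, pen_lifted)` step format and the `jitter_steps` helper are hypothetical, and plain Gaussian noise is only a crude stand-in for the randomness a trained network would actually sample. Even so, it shows how noise applied to pen movements changes the shape of each stroke without touching the stroke count or order.

```python
import random
from typing import List, Tuple

Step = Tuple[float, float, int]  # (dx, dy, pen_lifted), an assumed format


def jitter_steps(steps: List[Step], scale: float, seed: int = 0) -> List[Step]:
    """Add Gaussian noise to each pen movement, leaving pen-lift flags alone."""
    rng = random.Random(seed)  # seeded so the wobble is repeatable
    return [
        (dx + rng.gauss(0.0, scale), dy + rng.gauss(0.0, scale), pen_lifted)
        for dx, dy, pen_lifted in steps
    ]


# Hypothetical plus sign: a horizontal stroke, then a vertical stroke.
plus_steps = [(0.0, 1.0, 0), (2.0, 0.0, 1), (-1.0, -1.0, 0), (0.0, 2.0, 1)]
wobbly_steps = jitter_steps(plus_steps, scale=0.1)
```

The result is still two strokes drawn in the same order, just wobblier. The same amount of noise sprinkled over a bitmap would only produce speckle.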

It also opens the possibility of teaching an AI to draw. Indeed, hardmaru suggested the TU Berlin Sketch Database as a possible future source of data.