Nick Montfort’s 1K Story Generators (2008)

Now that NaNoGenMo is done, let’s jump back in time a bit to talk about some pre-NaNoGenMo story generators.

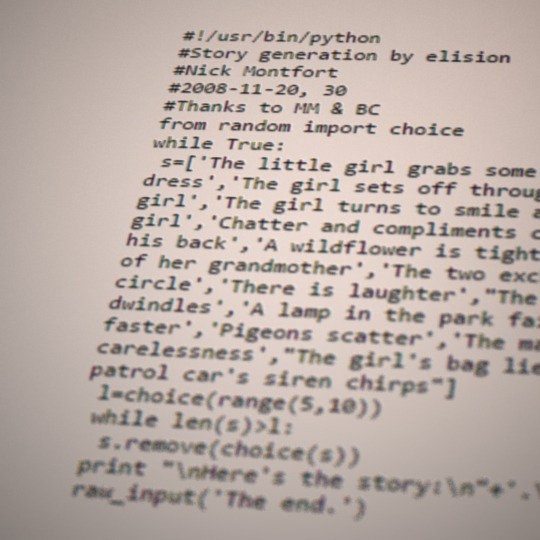

Nick Montfort has been talking about interactive fiction and creative computing for quite some time. (He’s currently an associate professor of digital media at MIT.) In 2008, he wrote a tiny little story generator that worked by elision: taking a list of sentences, removing some of them, and presenting the remaining ones. He followed it up with a couple of others that used similar processes.
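To make the elision idea concrete, here is a minimal sketch of the general technique. It is not Montfort's code: the function name `elide`, the `keep` parameter, and the placeholder sentences are all assumptions made for illustration, and the real generators are far more compact.

```python
import random

def elide(sentences, keep, rng=None):
    """Return `keep` of the sentences, preserving their original order.

    Hypothetical helper for illustration; not taken from Montfort's scripts.
    """
    rng = rng or random.Random()
    # Pick which positions survive, then sort so the story stays in order.
    kept = sorted(rng.sample(range(len(sentences)), keep))
    return [sentences[i] for i in kept]

# Placeholder sentences, not the text of any of the original generators.
SENTENCES = [
    "The guest arrives.",
    "The host opens the door.",
    "A glass breaks.",
    "Someone laughs.",
    "The guest leaves.",
    "The host locks the door.",
]

if __name__ == "__main__":
    print("Here's the story:")
    for line in elide(SENTENCES, keep=3):
        print(line)
    print("The end.")
```

Sorting the surviving indices is the important step: the sentences that remain still appear in their written order, which is what lets a reader fill in the gaps.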

Here’s one output from story3.py (with sentences written by Beth Cardier):

Here’s the story:

It’s Tommy’s birthday.

Mother steps away to answer the phone.

The dog leaps onto the counter.

Rufus grabs the prize.

Tommy chases Rufus.

A truck hums along.

Rufus wags his tail.

A lawnmower sputters.

Tommy runs outside holding Rufus.

A cloud darkens the sky.

The end.

I tend to think that the elision (and addition, in this one) is a surprisingly effective way to take advantage of the human tendency toward pareidolia and apophenia. It’s not computationally complicated (you could implement these generators with playing cards), but it doesn’t have to be.

It also gives me an opportunity to talk about the distinction between one instance of the story, and all the possible stories that the generator can produce. I get one sense for the first story I read from one of these generators, and a somewhat different sense once I’ve read enough (or understood the source code) to have some idea about the entire story space.
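For a generator this small, the whole story space can be enumerated. The sketch below assumes a pure elision generator that keeps a fixed number of sentences in order. The sentences and the `KEEP` value are hypothetical placeholders, not the contents of story2.py or story3.py.

```python
from itertools import combinations
from math import comb

# Hypothetical placeholder sentences, not taken from story2.py or story3.py.
SENTENCES = [
    "The dog barks.",
    "A door slams.",
    "Someone runs.",
    "The rain starts.",
    "The street goes quiet.",
]
KEEP = 3  # assumed number of sentences that survive elision

# combinations() preserves input order, so each tuple is one possible story.
stories = list(combinations(SENTENCES, KEEP))
assert len(stories) == comb(len(SENTENCES), KEEP)

# How many of the possible stories actually state a given "fact"?
with_dog = [s for s in stories if "The dog barks." in s]

print(len(stories))   # 10
print(len(with_dog))  # 6
```

In this toy version, a detail like the dog is stated in only some of the possible stories, so whether it counts as true depends on whether you are reading one story or the whole space.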

What it comes down to, I think, is whether you consider what a story says to be true for all of the stories, or only for the particular story that you are reading. Here’s another story from the same generator:

Here’s the story:

It’s Tommy’s birthday.

Tommy loves salmon.

Mother screams.

The sky is clear and blue.

Tommy cries.

Mother kicks Rufus.

A bicycle bell rings.

Tommy runs outside holding Rufus.

The newspaper slaps the front door.

The end.

This time, Rufus is innocent. (Indeed, we only know that Rufus is a dog if we read the first story.) Tommy’s life is a lot darker, here.

So, are these separate stories, or two versions of the same story? Do we think of them as different views of the same events, or totally unconnected stories? Or both at the same time? Is Rufus always a dog? Are we reading the individual story, or the metastory that’s made up of all of the possible stories? I don’t know that there needs to be one set answer for these questions, but I also don’t have a good way to distinguish them yet.

Rhizomic, labyrinthine works are intrinsic to the cybertextual form, but I don’t think the popular conception of digital storytelling really grasps them. I know I’d like to understand them better myself.

(If you’re interested in these questions, you might want to consider Sam Barlow’s Aisle as another example of a rhizomic story. Though there are many, many other precedents.)

The other reason I like these generators is that they’re simple enough to make that writers who don’t know how to program can still create their own. Take a stack of index cards, number them, and write a sentence on each one. Now shuffle the cards, remove a bunch, and put the remaining ones down in numerical order. Instant non-digital story generator, with lots of room to experiment.

If you’re interested in playing with procedurally generated storytelling, this is an excellent place to begin. Making one as an exercise will teach you a lot about the kinds of writing that work for procedural generation.

Meanwhile, I’ll leave you with these stories from story2.py:

Here’s the story:

The police officer nears the alleged perpetrator.

He hugs her.

Each one learns something.

The end.

Here’s the story:

The babysitter approaches the child.

She defies him.

They feel better after a good cry.

The end.

https://grandtextauto.soe.ucsc.edu/2008/11/30/three-1k-story-generators/